Streamline Your AI Workflow for Better Prompt Management and Collaboration

Why AI Prompt Management Is Essential for Modern Workflows

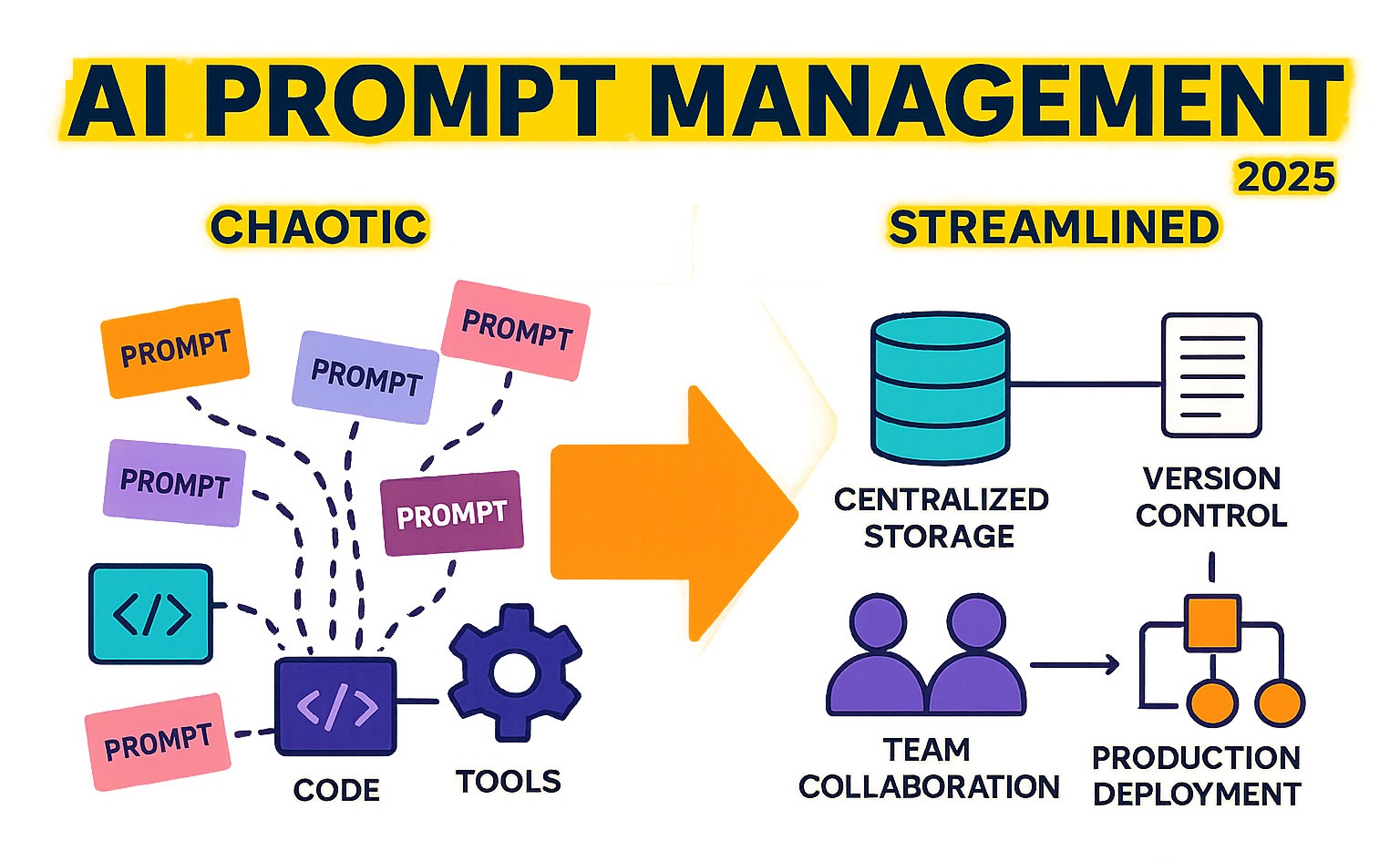

AI prompt management is the systematic approach to organizing, versioning, and deploying prompts across your AI applications. It transforms chaotic, scattered prompts into a streamlined, collaborative system that scales with your needs by providing version control, centralized storage, team collaboration, and environment management.

Your team has run successful AI pilots, and the results are promising. Now comes the challenging part: taking these proof-of-concepts into production. What started as a few hardcoded prompts has likely grown into dozens scattered across codebases, Slack threads, and spreadsheets.

As AI applications scale from experiments to enterprise-grade systems, managing prompts becomes a critical challenge. The difference between a successful AI deployment and a frustrating one often comes down to how well you manage the prompts that drive your models. Without a proper system, teams face scattered control, inconsistent outputs, and the inability to trace which prompt version caused a production issue. By some widely cited estimates, knowledge workers already spend about 2.5 hours per day searching for information. Don't let your best prompts become part of that lost time.

What is Prompt Management and Why Is It Crucial?

Think of prompt engineering as the work of a talented chef who crafts amazing dishes. AI prompt management is the entire restaurant operation that ensures every dish is documented, tested, and consistently excellent. While prompt engineering focuses on crafting the perfect instructions, prompt management handles the entire lifecycle: storing prompts safely, updating them without breaking things, enabling team collaboration, and tracking what works in the real world.

This becomes crucial when moving from a quick experiment to a production application serving hundreds of customers. A few prompts hardcoded into your app work for a demo, but this casual approach creates major headaches at scale. You suddenly need reliability to prevent random answers, governance to ensure prompts are safe and appropriate, and traceability to know exactly which prompt caused a problem at 2 AM.

From Playground to Production: Overcoming Scaling Challenges

The journey from a cool demo to a dependable product often gets messy. Those few hardcoded prompts multiply, with different versions living in code, config files, or Slack messages. This chaos of scattered prompts means no one knows where the "real" version is. Updating a prompt becomes a detective hunt, and changing a single word can require a full application redeployment.

Debugging becomes a nightmare when you can't trace a bad output back to a specific prompt version. The lack of a single source of truth leads to inconsistent user experiences, and without automated rollback strategies, fixing a broken prompt is a high-stakes gamble. This is why systematic prompt management is essential for any AI application that needs to work reliably.

The Key Benefits of a Systematic Approach

Implementing proper AI prompt management brings tangible benefits that impact your bottom line and team happiness:

- Quality Control: Ensure consistent, reliable outputs that meet quality standards through review and testing.

- Cost Optimization: Track LLM usage to identify and optimize expensive, inefficient prompts.

- Performance Monitoring: Use real data on response times to make informed decisions about optimization.

- Reusability: Build a library of proven, high-performing prompts that your entire team can adapt and reuse.

- Versioning: Track prompt evolution, compare versions, and instantly roll back when a new version causes problems.

- Collaboration: Break down silos by allowing non-technical experts to contribute their knowledge without needing to code.

- Governance: Control who can create, modify, and deploy prompts, ensuring only approved versions reach production.

- Traceability: Connect every output to the specific prompt version that created it, simplifying debugging and optimization.

Common Mistakes to Avoid in Prompt Management

Watch out for these common pitfalls:

- Hardcoding Prompts: Convenient at first, but it ties prompt updates to your application deployment cycle, slowing down iteration.

- Skipping Version Control: Without a history of changes, you can't easily revert to a working version or understand how prompts have evolved.

- Limiting Collaboration: Restricting prompt editing to engineers cuts you off from valuable expertise from product, content, and customer-facing teams.

- Neglecting Metadata: A prompt is more than text; without its model settings, temperature, and context, reproducing results is nearly impossible.

- Lack of Observability: Flying blind without data on cost, latency, and quality makes it impossible to improve prompts systematically.

Core Strategies for Managing Prompts: From DIY to Dedicated Systems

The Spectrum of DIY Solutions

Most teams start with DIY methods because they are quick and cheap, but they often create technical debt as you grow.

- Inline Prompts in Code: The simplest method is to write prompts directly in your source code. It's fast for a proof-of-concept, but every minor tweak requires a full code redeployment, and non-engineers cannot contribute without going through a developer.

- Centralized Configuration Files: Moving prompts to JSON or YAML files is a step up. It decouples prompts from application logic but still ties updates to the code deployment cycle and isn't user-friendly for non-technical contributors.

- Custom Database Solution: Building your own database to store prompts offers dynamic updates without code deployments. However, this means you're building and maintaining an entire prompt management system from scratch, pulling valuable engineering resources away from your core product.
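To make the configuration-file option concrete, here is a minimal sketch. The file contents, the prompt name `support_reply`, the model name, and the helper functions are all hypothetical and chosen for illustration; a real setup would read the JSON from disk rather than from a string.

```python
import json
from string import Template

# Hypothetical contents of a prompts.json file kept alongside the app.
PROMPTS_JSON = """
{
  "support_reply": {
    "template": "You are a support agent for $product. Answer: $question",
    "model": "example-model",
    "temperature": 0.2
  }
}
"""


def load_prompts(raw: str) -> dict:
    """Parse the JSON document into a dict keyed by prompt name."""
    return json.loads(raw)


def render(prompts: dict, name: str, **variables: str) -> str:
    """Fill a named template; raises KeyError if a variable is missing."""
    return Template(prompts[name]["template"]).substitute(**variables)


prompts = load_prompts(PROMPTS_JSON)
text = render(
    prompts,
    "support_reply",
    product="Acme",
    question="How do I reset my password?",
)
print(text)
```

The application code now refers to prompts by name only, so the wording can change without touching the logic. The trade-off described above still applies: if the file ships with the application, a wording change still rides along with a release.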

The Power of Dedicated Systems: The "Git for Prompts" Approach

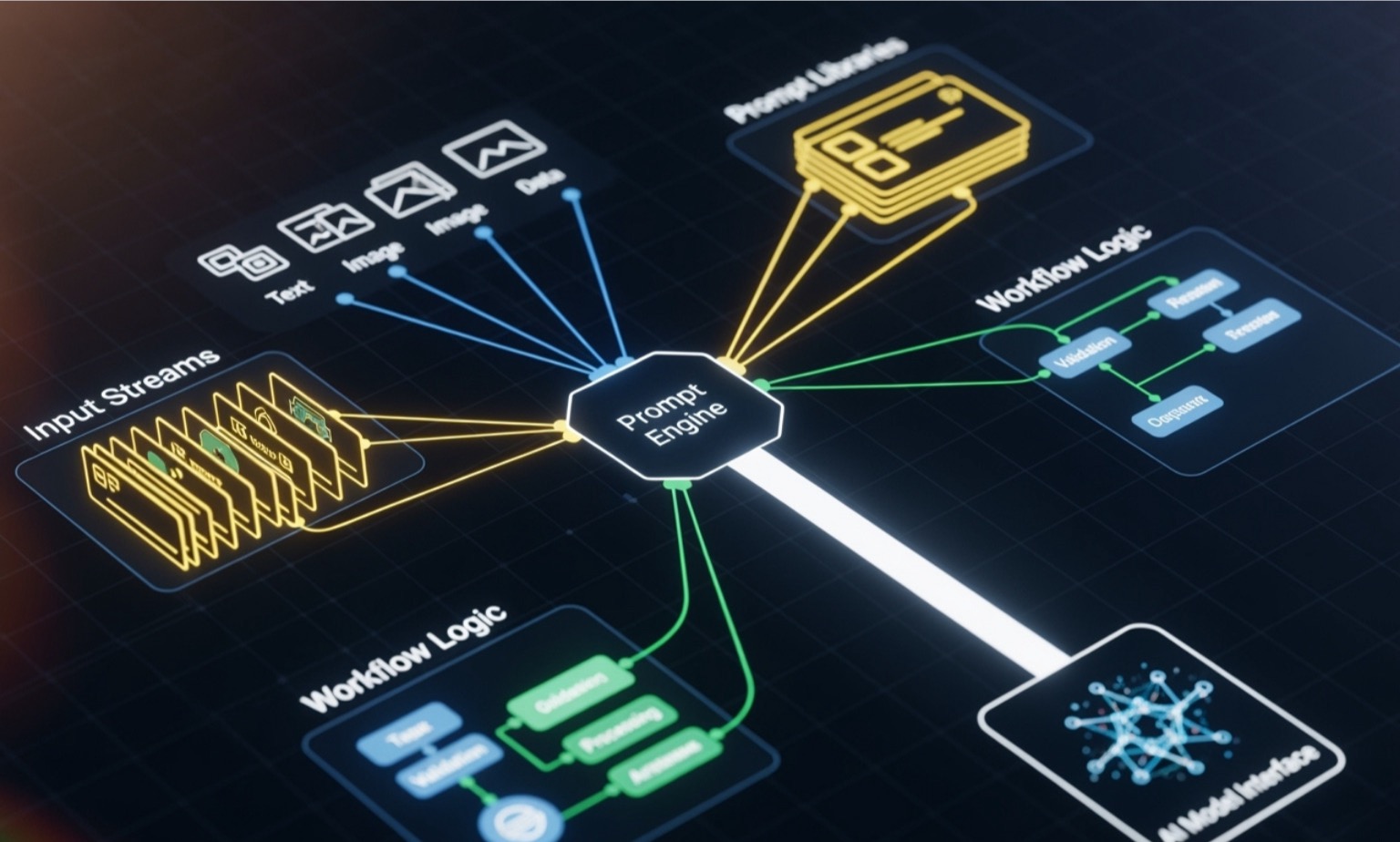

As applications mature, dedicated AI prompt management systems become essential. Think of them as "Git for prompts": a version control system purpose-built for AI. These platforms decouple prompts from your application code, allowing non-technical team members to update, test, and deploy prompts through a user-friendly web interface without waiting on an engineering release.

These systems typically feature a web interface for visual prompt management and an API layer for programmatic integration with your applications. This combination provides the best of both worlds: business-user accessibility and technical robustness. A dedicated system offers:

- Centralized Storage: A single source of truth for all prompts.

- Robust Version Control: Automatic history tracking with diffs and one-click rollbacks.

- Team Collaboration: Features for sharing, commenting, and approval workflows.

- Environment Management: The ability to test prompts in dev/staging before deploying to production.

- Evaluation & Observability: Tools for A/B testing and dashboards for monitoring cost, latency, and quality in production.

| Feature | DIY Approach | Dedicated System |
|---|---|---|
| Scalability | Limited, requires manual effort | Designed for growth and complexity |
| Team Collaboration | Difficult, often relies on Slack or email | Built-in sharing, commenting, and approval workflows |
| Version Control | Manual or tied to code versioning | Automatic versioning with diffs and rollback |
| Security & Access | Basic, usually code-level permissions | Granular, role-based access controls |
| Testing & Evaluation | Manual, ad-hoc approaches | Integrated A/B testing and regression analysis |
| Performance Monitoring | Usually non-existent | Comprehensive cost, latency, and quality tracking |
| Maintenance Overhead | High, requires ongoing development | Low, managed by the platform provider |
| Deployment Speed | Slow, coupled with code releases | Fast, decoupled from application deployments |

The shift to a dedicated system transforms prompt engineering from a solo technical task into a collaborative team capability, dramatically improving the quality and speed of your AI development.

Key Features of an Effective AI Prompt Management System

When we're choosing an AI prompt management system, we're not just looking for a digital filing cabinet for our prompts. We need a comprehensive platform that nurtures our prompts through their entire journey—from that first spark of an idea to robust production monitoring.

Think of it like choosing a home for your growing family. You need the basics covered, but you also want room to grow and features that make daily life smoother.

Core Capabilities: Version Control, Collaboration, and Environments

These are the non-negotiable features that form the foundation of a robust system.

- Storing and Organizing: A centralized home for all your prompts, with tags or folders to make them easily searchable.

- Version Control: Every change should automatically create a new version. The system must provide clear "diff" comparisons between versions and allow for one-click rollbacks to a previous state. Many teams find success applying Semantic Versioning (e.g., X.Y.Z) to understand the significance of changes at a glance.

- Collaboration: Features like shared workspaces, comments, and approval workflows transform prompt engineering into a team sport, allowing content, product, and technical experts to contribute.

- Environment Management: The ability to test and validate prompts in development and staging environments before deploying to production prevents untested changes from breaking your live application.
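One way to picture environment management is as a set of pointers, one per environment, each naming the prompt version that environment serves. The sketch below is an in-memory illustration under that assumption; the class `PromptEnvironments` and its method names are hypothetical, not the API of any particular product.

```python
from dataclasses import dataclass, field


@dataclass
class PromptEnvironments:
    """Maps each environment to the prompt version it currently serves."""

    versions: dict[str, str] = field(default_factory=dict)       # version -> text
    pointers: dict[str, str] = field(default_factory=dict)       # env -> version
    history: dict[str, list[str]] = field(default_factory=dict)  # env -> earlier versions

    def add_version(self, version: str, text: str) -> None:
        # Versions are immutable: a new wording always gets a new version string.
        if version in self.versions:
            raise ValueError(f"version {version} already exists")
        self.versions[version] = text

    def deploy(self, env: str, version: str) -> None:
        if version not in self.versions:
            raise KeyError(version)
        if env in self.pointers:
            # Remember what was live so rollback() can restore it.
            self.history.setdefault(env, []).append(self.pointers[env])
        self.pointers[env] = version

    def promote(self, source: str, target: str) -> None:
        """Deploy whatever `source` currently serves to `target`."""
        self.deploy(target, self.pointers[source])

    def rollback(self, env: str) -> None:
        """Restore the previously deployed version (errors if there is none)."""
        self.pointers[env] = self.history[env].pop()

    def get(self, env: str) -> str:
        return self.versions[self.pointers[env]]


envs = PromptEnvironments()
envs.add_version("1.0.0", "Summarize the ticket.")
envs.add_version("1.1.0", "Summarize the ticket in two sentences.")
envs.deploy("production", "1.0.0")
envs.deploy("staging", "1.1.0")

envs.promote("staging", "production")  # production now serves 1.1.0
envs.rollback("production")            # production is back on 1.0.0
```

Because deployment only moves a pointer, promoting or rolling back never edits prompt text, which is what keeps a rollback a single, low-risk step.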

Advanced Features: Evaluation, Observability, and Analytics

Great systems go beyond the basics to help you continuously improve your LLM applications.

- Evaluation Tools: These features answer the question, "Is this prompt actually better?" They include A/B testing to compare versions with live traffic, regression testing to catch unexpected changes in output, and backtesting to see how a new prompt would have performed on historical data.

- Performance Dashboards: These provide clarity on what's happening in production. Cost and latency tracking are especially critical, as they show the financial impact and speed of your prompts. Visualizing usage patterns and success rates helps you make data-driven decisions for optimization.
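A/B testing two prompt versions needs a stable way to decide which users see which version. A common technique is hash-based bucketing, sketched below. The function name, the experiment label, and the 10,000-bucket granularity are assumptions made for this example.

```python
import hashlib

BUCKETS = 10_000  # granularity of the traffic split (an arbitrary choice)


def assign_variant(user_id: str, experiment: str, treatment_share: float = 0.5) -> str:
    """Deterministically place a user in 'control' or 'treatment'.

    The same user and experiment always map to the same variant, so a user
    does not flip between prompt versions from one request to the next.
    """
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % BUCKETS
    threshold = round(treatment_share * BUCKETS)
    return "treatment" if bucket < threshold else "control"


variant = assign_variant("user-123", "summary-prompt-v2")
prompt_version = "1.1.0" if variant == "treatment" else "1.0.0"
print(variant, prompt_version)
```

Including the experiment name in the hash keeps assignments independent across experiments, so the same users are not always in the treatment group.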

The Critical Role of Metadata and Traceability

Professional-grade systems treat metadata as a first-class citizen. This is the rich context that makes your prompts truly manageable.

- Metadata: This includes essential details like model parameters (temperature, token limits, model name), author information, and version tags that explain the "why" behind a change.

- Traceability: All this metadata feeds into traceability, your debugging superpower. When an issue occurs, you can trace an output back to the exact prompt version and its settings that generated it. This makes debugging straightforward, ensures reproducibility for testing, and creates the audit trails necessary for governance and compliance.
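As a rough illustration of how metadata and traceability fit together, the sketch below stores a prompt's settings next to its text and stamps every output with the version that produced it. The field names, the fingerprint scheme, and the sample values are assumptions for this example rather than a standard schema.

```python
import hashlib
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class PromptVersion:
    name: str
    version: str
    template: str
    model: str
    temperature: float
    max_tokens: int
    author: str
    change_note: str  # the "why" behind this version

    def fingerprint(self) -> str:
        """Short hash over text and settings; changing either changes the hash."""
        payload = json.dumps(asdict(self), sort_keys=True).encode("utf-8")
        return hashlib.sha256(payload).hexdigest()[:12]


def trace_record(prompt: PromptVersion, request_id: str, output: str) -> dict:
    """Build the log entry that links one output to the exact prompt behind it."""
    return {
        "request_id": request_id,
        "prompt_name": prompt.name,
        "prompt_version": prompt.version,
        "prompt_fingerprint": prompt.fingerprint(),
        "model": prompt.model,
        "temperature": prompt.temperature,
        "max_tokens": prompt.max_tokens,
        "output": output,
    }


prompt = PromptVersion(
    name="ticket_summary",
    version="1.1.0",
    template="Summarize the ticket in two sentences.",
    model="example-model",  # placeholder, not a real model name
    temperature=0.2,
    max_tokens=200,
    author="dana",
    change_note="Limit length after complaints about long summaries",
)
record = trace_record(prompt, request_id="req-001", output="(model output here)")
print(json.dumps(record, indent=2))
```

With records like this in your logs, a bad output can be looked up by request ID and matched to one prompt version and one set of model settings.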

Fostering Collaboration and Integrating with LLMOps

The magic of AI prompt management truly shines when it breaks down silos and brings teams together. Gone are the days when prompt engineering was a lonely developer task hidden deep in code repositories. Today's most successful AI applications emerge from collaborative efforts that harness the collective wisdom of entire teams.

Empowering the Whole Team: Bridging Technical and Non-Technical Roles

The best prompt engineers are often the subject matter experts—the marketing manager who knows the brand voice, the support lead who understands user questions, or the legal expert who knows compliance requirements. Historically, these experts were locked out of the process, leading to slow, frustrating feedback cycles.

Modern prompt management systems change this with no-code prompt editors. These intuitive web interfaces allow non-technical team members to write, test, and refine prompts directly, without writing code. This shift reduces engineering bottlenecks, as teams can make immediate adjustments based on real-world feedback. When the people who best understand the desired outcome can directly shape the AI's instructions, the quality of the outputs improves dramatically.

Integrating with the Broader LLMOps and MLOps Ecosystem

For AI applications to scale reliably, prompt management must integrate with your existing development and operations infrastructure. This means treating prompts with the same rigor as application code.

- CI/CD for Prompts: This involves applying automated quality controls to your AI instructions. When a new prompt version is proposed, automated tests can run it against evaluation datasets to catch issues before they reach production.

- Programmatic Deployment: Modern systems integrate with your deployment pipelines via APIs, allowing your applications to pull the latest approved prompt versions automatically. This ensures consistency while maintaining the flexibility to roll back quickly.

- Framework Integration: These systems connect with popular tools like LangChain, allowing you to manage prompts within complex AI workflows using the same systematic, version-controlled approach.
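The CI/CD idea above can be sketched as a small check that runs a candidate prompt against an evaluation set and reports failures. Everything here is illustrative: the evaluation cases, the substring-based pass criterion, and `fake_model`, which stands in for a real LLM call so the example runs offline.

```python
from typing import Callable

# Hypothetical evaluation cases: input variables plus substrings the output must contain.
EVAL_CASES = [
    {"vars": {"question": "How do I reset my password?"}, "must_contain": ["password"]},
    {"vars": {"question": "Where is my invoice?"}, "must_contain": ["invoice"]},
]


def fake_model(prompt: str) -> str:
    """Stand-in for a real LLM call; it simply echoes the prompt it was given."""
    return f"Answer based on: {prompt}"


def evaluate(template: str, cases: list[dict], call_model: Callable[[str], str]) -> list[str]:
    """Return failure messages; an empty list means the candidate passed."""
    failures = []
    for i, case in enumerate(cases):
        output = call_model(template.format(**case["vars"])).lower()
        for needle in case["must_contain"]:
            if needle.lower() not in output:
                failures.append(f"case {i}: missing {needle!r}")
    return failures


candidate = "You are a support agent. Question: {question}"
failures = evaluate(candidate, EVAL_CASES, fake_model)
print("PASS" if not failures else failures)
```

In a pipeline, a non-empty failure list would fail the build and block the new prompt version from being promoted. Real evaluations usually need richer criteria than substring checks, such as graded rubrics or comparisons against reference answers.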

By weaving AI prompt management into your broader LLMOps strategy, you build a foundation for AI applications that can grow, adapt, and improve continuously.

Frequently Asked Questions about Prompt Management

We hear these questions all the time from teams diving into AI prompt management. If you're wondering about any of these, you're in good company.

What's the first step to implementing prompt management?

The single most important first step is to decouple your prompts from your application code. Start by gathering all prompts scattered throughout your codebase and moving them into a centralized location, such as a single configuration file (JSON or YAML) or a simple database table. This step alone provides immediate visibility and makes updates far easier: prompt changes become small, reviewable edits in one place, and if your application reads the file or table at runtime, you no longer need to redeploy the entire application to tweak a prompt's wording.

How does prompt versioning actually work?

Prompt versioning works much like Git does for code. Every time you save a change to a prompt, the system automatically creates a new, unique version. This builds a complete history of every modification. You can then visually compare any two versions to see exactly what changed (a "diff") and, most importantly, perform an instant rollback. If a new prompt version performs poorly in production, you can revert to the previous working version with a single click, providing a critical safety net.
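The mechanics described above fit in a few lines. This is a minimal in-memory sketch using Python's standard `difflib` module; the `PromptHistory` class is hypothetical, and a real system would persist versions and record who changed what.

```python
import difflib


class PromptHistory:
    """Append-only history for one prompt; version numbers start at 1."""

    def __init__(self) -> None:
        self._versions: list[str] = []

    def save(self, text: str) -> int:
        """Store a new version and return its number."""
        self._versions.append(text)
        return len(self._versions)

    def get(self, version: int) -> str:
        if not 1 <= version <= len(self._versions):
            raise IndexError(f"no such version: {version}")
        return self._versions[version - 1]

    def diff(self, old: int, new: int) -> str:
        """Unified diff between two versions, similar to `git diff` output."""
        lines = difflib.unified_diff(
            self.get(old).splitlines(),
            self.get(new).splitlines(),
            fromfile=f"v{old}",
            tofile=f"v{new}",
            lineterm="",
        )
        return "\n".join(lines)

    def rollback(self, version: int) -> int:
        """Re-save an old version as the newest one, keeping the full history."""
        return self.save(self.get(version))


history = PromptHistory()
v1 = history.save("Summarize the ticket.\nBe polite.")
v2 = history.save("Summarize the ticket in two sentences.\nBe polite.")
changes = history.diff(v1, v2)
print(changes)

v3 = history.rollback(v1)  # v3 has the same text as v1
```

Rolling back by appending a new version, rather than deleting the bad one, keeps the record of what was tried and why it was reverted.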

Can non-engineers really manage and deploy prompts?

Absolutely. This is a key benefit of modern prompt management systems. They provide intuitive, no-code web interfaces that feel like a content management system, not a developer tool. This empowers non-technical experts—like product managers, content strategists, and support leads—to edit, test, and deploy prompts without writing any code. This transforms prompt engineering from a solo technical task into a collaborative team effort, leading to faster iteration and higher-quality prompts shaped by domain expertise.

Conclusion

As we've explored throughout this guide, AI prompt management is far more than just keeping track of text strings—it's the backbone of any serious LLM application that needs to work reliably at scale. Think of it as the difference between a chaotic kitchen where recipes are scribbled on napkins versus a professional restaurant with organized, tested recipes that deliver consistent results every time.

We've seen how the journey from scattered, hardcoded prompts to a systematic approach transforms everything. Quality control becomes possible when you can track what's working and what isn't. Cost optimization happens naturally when you can see which prompts are burning through tokens unnecessarily. Collaboration flourishes when your product manager can tweak a prompt without waiting for an engineering deployment.

But here's what excites us most: we're witnessing prompt engineering evolve from a solo technical skill into a collaborative craft. The best prompts often come from the people who understand the problem best—your domain experts, content strategists, and product managers. When these folks can directly shape how your AI behaves, magic happens.

This shift represents the future of AI development. It's not just about engineers writing better code; it's about entire teams contributing their expertise to make AI applications truly intelligent and useful.

At Potions, we're passionate about making this collaborative future a reality. Our platform is designed as a community-powered AI prompt marketplace where the best ideas rise to the surface through collective intelligence. Every prompt gets automatic versioning and a stable URL, so you can experiment fearlessly knowing you can always trace back to what worked.

What makes us different? We believe in the power of remixing and building on each other's work. You can instantly search hundreds of proven prompts, adapt them for your specific needs, and contribute your improvements back to the community. It's like having a library of AI expertise that grows stronger with every contribution.

Ready to transform your AI prompt management from chaos to collaboration? Your team deserves better than scattered prompts and deployment bottlenecks.