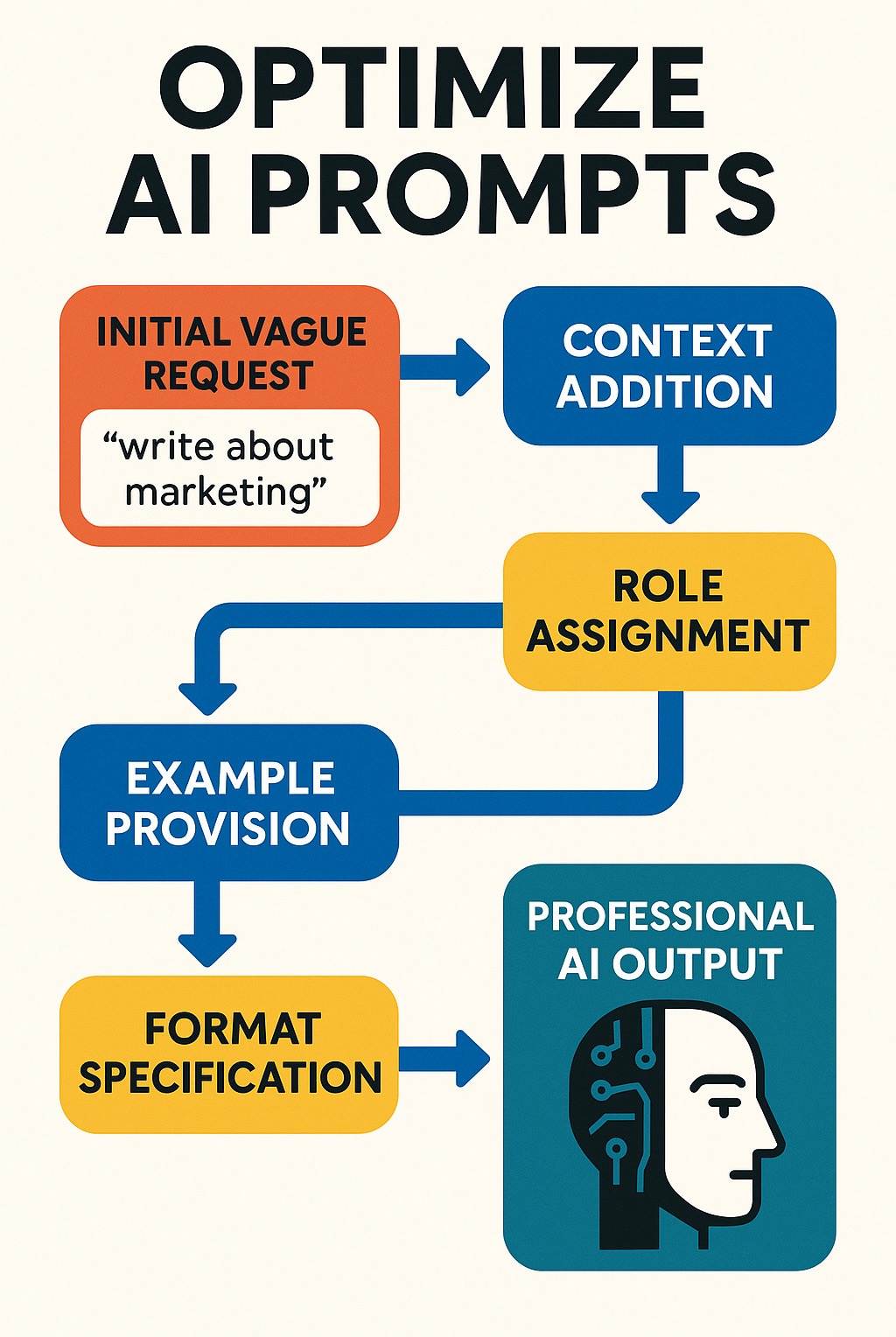

Beyond Basic: Master the Art of Optimizing AI Prompts

Why Your Prompts Need More Than a Polish

Learning to optimize AI prompts can transform mediocre AI outputs into professional-grade results. Here's what prompt optimization actually involves:

Core Optimization Techniques:

- Clarity and Context - Use specific instructions with clear delimiters (###, """)

- Role Assignment - Give AI a persona like "expert data analyst" or "experienced copywriter"

- Few-Shot Examples - Show the AI exactly what you want with input-output pairs

- Structured Outputs - Request specific formats like JSON, tables, or numbered lists

Advanced Strategies:

- Chain-of-Thought - Ask AI to think step-by-step for complex problems

- RAG Integration - Connect AI to external data sources for current information

- ReAct Framework - Combine reasoning with real-time tool usage

Many professionals sense they're leaving value on the table with AI. You craft a prompt, get bland output, tweak it, and repeat until something decent emerges. The problem usually isn't the AI; it's common mistakes like being too vague or skipping essential context.

Prompt optimization bridges the gap between human intent and machine understanding. It's the difference between asking "write about marketing" and crafting instructions that produce the exact marketing copy you need for a specific audience and goal.

When you optimize prompts properly, you get consistent, high-quality outputs far more often on the first try, which cuts down on endless tweaking and generic responses.

The Anatomy of a Perfect Prompt: Core Optimization Techniques

Think of crafting prompts like having a conversation with a brilliant but very literal colleague. They know everything but need crystal-clear instructions to give you exactly what you want. When you optimize AI prompts, you're essentially learning to speak their language fluently.

Clarity and Context: Be Direct, Not Vague

AI is incredibly smart but can't read minds. Asking it to "write something good about dogs" yields generic fluff. Asking it to "write a heartwarming 200-word story about a rescue Golden Retriever learning to trust humans again, told from the dog's perspective" gives it a length, a subject, a tone, and a point of view to work with, and the result is far closer to what you had in mind.

Specificity is key. Instead of hoping the AI guesses your intent, paint a detailed picture: define your audience, tone, length, and format. Instead of "make it engaging," get concrete: "write in a conversational tone for busy marketing managers who need actionable insights."

Context provides the necessary backstory. For email copy, specify if it's B2B or B2C, formal or casual. This background helps the AI craft responses that fit your situation.

Smart prompt writers use delimiters like ### or """ to separate instructions and prevent confusion:

### YOUR TASK ###

Summarize this article in three bullet points for busy executives.

### ARTICLE TEXT ###

[Your content here]
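If you assemble prompts in code, a small helper keeps the delimiters consistent from one request to the next. Here's a minimal sketch in Python; the function name `build_delimited_prompt` and the sample article text are illustrative assumptions, not part of any library.

```python
def build_delimited_prompt(task: str, article: str) -> str:
    """Place the instruction and the source text under separate ### headers."""
    return (
        "### YOUR TASK ###\n"
        f"{task.strip()}\n\n"
        "### ARTICLE TEXT ###\n"
        f"{article.strip()}"
    )


prompt = build_delimited_prompt(
    "Summarize this article in three bullet points for busy executives.",
    "Quarterly revenue rose while support costs fell.",  # placeholder content
)
print(prompt)
```

Because the article text always lands under its own header, instructions that happen to appear inside the article are less likely to be mistaken for yours.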

For complex requests, use task decomposition. Instead of asking for an entire marketing strategy, break it down: first ask for audience analysis, then competitor research, then specific tactics. This step-by-step approach delivers better results. You can dive deeper into these techniques with OpenAI's official prompt engineering guide.
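Task decomposition is easy to picture as a loop: each step gets its own prompt, and earlier answers are passed forward as context. The sketch below assumes a hypothetical `ask` callable standing in for whatever model client you use; `fake_ask` just echoes the first line so the example runs offline.

```python
from typing import Callable


def run_steps(steps: list[str], ask: Callable[[str], str]) -> list[str]:
    """Run prompts in order, appending earlier answers to each later prompt."""
    answers: list[str] = []
    for step in steps:
        context = "\n\n".join(answers)
        prompt = f"{step}\n\n### EARLIER RESULTS ###\n{context}" if context else step
        answers.append(ask(prompt))
    return answers


def fake_ask(prompt: str) -> str:
    # Hypothetical stand-in for a real model call.
    return "Result for: " + prompt.splitlines()[0]


steps = [
    "Describe the target audience for a budgeting app.",
    "List three competitors and their main weakness.",
    "Propose three marketing tactics based on the results so far.",
]
results = run_steps(steps, fake_ask)
for line in results:
    print(line)
```

Swapping `fake_ask` for a real client call is the only change needed to try this against a live model.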

Role-Playing: Assign a Persona for Expert Output

To get professional-quality output, give your AI a job title. This simple trick transforms generic responses into expertly crafted content. The difference is remarkable. Asking AI to "explain blockchain" yields a textbook definition. But asking it to "act as a patient tech consultant explaining blockchain to a small business owner" delivers clear, relevant, jargon-free explanations.

Role assignment gives AI context for tone, expertise, and perspective. Saying "you're a witty social media manager" makes the AI adjust its language and adopt the right voice.

This technique works across business needs. For marketing content, try "You're an experienced email marketer writing to busy professionals." For software development, use "You're a senior developer who excels at writing clean, well-documented code." The key is being specific about the persona's background and audience. Don't just say "expert"—say "seasoned consultant who helps startups scale." This detail helps AI adopt the perfect tone.
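In chat-style APIs, the persona usually goes in a system message that precedes the user's request. The sketch below assumes the common `role`/`content` message layout; the helper name `with_persona` is made up for illustration, and your provider's exact field names may differ.

```python
def with_persona(persona: str, audience: str, request: str) -> list[dict[str, str]]:
    """Build a two-message conversation: persona first, then the actual request."""
    system = f"You are {persona}. You are writing for {audience}."
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": request},
    ]


messages = with_persona(
    "a patient tech consultant",
    "a small business owner with no technical background",
    "Explain blockchain in plain language.",
)
for message in messages:
    print(f"{message['role']}: {message['content']}")
```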

Provide Examples: Show, Don't Just Tell with Few-Shot Prompting

Sometimes explaining isn't enough—you need to show the AI what success looks like. This is where few-shot prompting becomes a secret weapon for consistent outputs.

While zero-shot prompting gives instructions without examples (e.g., "translate to French"), and one-shot prompting gives one, few-shot prompting provides multiple examples to teach the AI your exact pattern and style.

AI models excel at pattern recognition, so showing them 2-3 perfect examples helps them understand how you want content delivered.

Let's say you need product descriptions with a specific structure. Instead of describing your format requirements, show the AI:

Write product descriptions following this pattern:

Example 1 - Wireless Earbuds:

"Crystal-clear sound meets all-day comfort. Features noise cancellation, 8-hour battery, and sweat-proof design. Perfect for workouts and commutes. Order yours today!"

Example 2 - Smart Watch:

"Stay connected without missing a beat. Tracks fitness, receives notifications, and lasts 5 days per charge. Your health and productivity companion. Get it now!"

Now write for: Bluetooth Speaker with waterproof design, 12-hour battery, 360-degree sound

This approach reduces guesswork and helps every output match your brand voice. The AI picks up your pattern and applies it consistently, saving you from repeated revisions. To go deeper, see this explainer on zero-shot, one-shot, and few-shot prompting.
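When you reuse the same examples across many products, it helps to generate the few-shot prompt from data rather than retyping it. Here's a rough sketch; `few_shot_prompt` is a hypothetical helper, and the examples are the two product descriptions shown above.

```python
def few_shot_prompt(
    instruction: str,
    examples: list[tuple[str, str]],
    new_input: str,
) -> str:
    """Lay out numbered example pairs, then the new item to write for."""
    parts = [instruction, ""]
    for number, (product, description) in enumerate(examples, start=1):
        parts.append(f"Example {number} - {product}:")
        parts.append(f'"{description}"')
        parts.append("")
    parts.append(f"Now write for: {new_input}")
    return "\n".join(parts)


examples = [
    ("Wireless Earbuds", "Crystal-clear sound meets all-day comfort. Features noise "
     "cancellation, 8-hour battery, and sweat-proof design. Perfect for workouts and "
     "commutes. Order yours today!"),
    ("Smart Watch", "Stay connected without missing a beat. Tracks fitness, receives "
     "notifications, and lasts 5 days per charge. Your health and productivity "
     "companion. Get it now!"),
]
prompt = few_shot_prompt(
    "Write product descriptions following this pattern:",
    examples,
    "Bluetooth Speaker with waterproof design, 12-hour battery, 360-degree sound",
)
print(prompt)
```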

Structure Your Output: Define the Desired Format

Getting a wall of text when you need organized data is frustrating. To truly optimize AI prompts, always specify your desired format.

Structured outputs make AI responses immediately useful. Need data for a spreadsheet? Request JSON format with specific field names. Building documentation? Ask for Markdown with proper headings. Want to compare options? Request a table with clear columns.

The magic is in the specifics. Instead of "make a list," try "create a numbered list with bold headings for each point." Instead of "organize this information," say "format as a table with columns for Feature, Benefit, and Cost."

Tables work beautifully for comparisons, and lists shine for step-by-step processes. For technical applications, JSON and XML formats let you feed AI outputs directly into other systems.

Think ahead about how you'll use the response. Will you copy-paste it, import it, or present it? Define the format that makes your next step effortless.
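If the response is headed into another system, it's worth checking the format before you trust it. The sketch below asks for a JSON object with three keys and validates a reply using Python's standard `json` module. The field names and the `sample_reply` string are assumptions for illustration; a real reply would come from your model.

```python
import json

REQUIRED_FIELDS = {"feature", "benefit", "cost"}


def format_request(text: str) -> str:
    """Ask for one JSON object with fixed keys and nothing else."""
    return (
        "Extract the product details from the text below. "
        "Respond with a single JSON object with exactly these keys: "
        "feature, benefit, cost. Do not add any other text.\n\n"
        f'"""\n{text}\n"""'
    )


def parse_reply(reply: str) -> dict:
    """Parse the reply and fail loudly if it is not the shape we asked for."""
    data = json.loads(reply)  # raises json.JSONDecodeError on malformed JSON
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    missing = REQUIRED_FIELDS - data.keys()
    if missing:
        raise ValueError(f"missing fields: {sorted(missing)}")
    return data


sample_reply = '{"feature": "Noise cancellation", "benefit": "Fewer distractions", "cost": "$20"}'
row = parse_reply(sample_reply)
print(row["feature"], "|", row["benefit"], "|", row["cost"])
```

A failed parse is a useful signal in itself: you can retry with a stricter prompt instead of passing bad data downstream.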

Advanced Strategies to Optimize AI Prompts for Complex Tasks

Once you've mastered the basics, it's time to tackle the really exciting stuff. These advanced techniques push AI beyond simple text generation into genuine problem-solving territory. Think of them as power tools for your AI toolkit—designed for those moments when you need the AI to think deeper, access current information, or even interact with other systems.

How to optimize AI prompts for complex reasoning using Chain-of-Thought (CoT)

Ever asked an AI a tricky math problem and gotten a confidently wrong answer? AI models sometimes jump to conclusions. Chain-of-Thought (CoT) prompting fixes this by asking the AI to think step-by-step.

Adding a simple phrase like "Let's think through this step-by-step" or "Show your work" makes the AI break down complex problems into manageable pieces. Instead of guessing at arithmetic, it walks through each calculation methodically.

This technique shines with multi-step problems involving arithmetic, logic puzzles, or complex reasoning. The transparency is a huge bonus: when the AI shows its reasoning, you can spot errors and adjust your prompts. Research shows this approach can substantially improve accuracy on multi-step reasoning tasks, as detailed in the original chain-of-thought prompting research.

How to optimize AI prompts for factual accuracy using Retrieval-Augmented Generation (RAG)

One of AI's biggest weaknesses is its knowledge cutoff date. Ask about recent news or your company's latest policies, and you'll get outdated information or confident-sounding fiction (hallucinations). Retrieval-Augmented Generation (RAG) addresses this by connecting your AI to external knowledge sources.

The system first searches your chosen knowledge base—your company's internal documents, a news database, or industry resources—for relevant information. It then feeds that context to the AI along with your original question.

This makes the AI a far more dependable research tool. Your legal team can query case law, and your customer service can access current policies. The vector databases behind many RAG systems match on meaning (semantic similarity), not just keywords. Best of all, RAG-powered AI can cite its sources, so you can verify where information came from.

Want to dive deeper? Check out What is Retrieval Augmented Generation (RAG)? for a comprehensive overview.
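The retrieve-then-prompt flow described above can be sketched in a few lines. To keep the example self-contained, retrieval here ranks documents by simple word overlap, which is a deliberate simplification: production systems typically use embeddings and a vector database instead. The documents and helper names are made up for illustration.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into a set of words and numbers."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, documents: list[str], k: int = 2) -> list[str]:
    """Return the k documents sharing the most words with the question."""
    query = tokenize(question)
    ranked = sorted(documents, key=lambda d: len(query & tokenize(d)), reverse=True)
    return ranked[:k]


def rag_prompt(question: str, documents: list[str]) -> str:
    """Put the retrieved passages ahead of the question, numbered for citation."""
    passages = retrieve(question, documents)
    sources = "\n".join(f"[{i}] {p}" for i, p in enumerate(passages, start=1))
    return (
        "Answer using only the sources below and cite them by number. "
        "If the sources do not contain the answer, say so.\n\n"
        f"### SOURCES ###\n{sources}\n\n### QUESTION ###\n{question}"
    )


docs = [
    "Refund policy: customers may return items within 30 days of delivery.",
    "Shipping policy: standard delivery takes 3 to 5 business days.",
    "Office hours: support is available Monday to Friday.",
]
question = "How many days do customers have to return items?"
top = retrieve(question, docs, k=1)
print(rag_prompt(question, docs))
```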

The ReAct Framework: Combining Reasoning with Action

What if your AI could not only think but also act? The ReAct framework (Reason + Act) enables this, turning AI from a passive text generator into an active problem-solver.

The AI alternates between reasoning about what to do next and taking actions using external tools, like searching the web or calling an API for real-time data. It then reasons about those results to decide its next step.

Imagine asking, "What's the weather in Tokyo, and should I pack an umbrella?" A ReAct-enabled AI would first reason it needs current data, then call a weather API. Next, it would analyze the forecast and give you a practical recommendation. This real-time information retrieval is essential for planning and research.

The framework also enables building autonomous agents that complete complex, multi-step tasks with minimal human intervention. The technical details are fascinating if you want to explore further: ReAct framework research paper.
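To make the reason-act-observe loop concrete, here's a toy version of the Tokyo example. Everything in it is a stand-in: `scripted_model` fakes the language model with two canned replies, `get_weather` returns hard-coded data instead of calling an API, and the `Action: tool[argument]` text format is one common convention rather than a fixed standard.

```python
from typing import Callable


def get_weather(city: str) -> str:
    # Hypothetical tool with canned data; a real one would call a weather API.
    return {"Tokyo": "rain, 18C"}.get(city, "unknown")


TOOLS: dict[str, Callable[[str], str]] = {"get_weather": get_weather}


def scripted_model(transcript: str) -> str:
    # Stand-in for a model: act first, answer once an observation is available.
    if "Observation:" not in transcript:
        return "Thought: I need current weather data.\nAction: get_weather[Tokyo]"
    return "Thought: Rain is forecast.\nFinal Answer: Yes, pack an umbrella."


def react(question: str, model: Callable[[str], str], max_steps: int = 5) -> str:
    """Alternate model replies and tool calls until a final answer appears."""
    transcript = f"Question: {question}"
    for _ in range(max_steps):
        reply = model(transcript)
        transcript += "\n" + reply
        if "Final Answer:" in reply:
            return reply.split("Final Answer:", 1)[1].strip()
        if "Action:" not in reply:
            break  # nothing to execute, so stop rather than loop forever
        action = reply.split("Action:", 1)[1].strip()  # e.g. get_weather[Tokyo]
        name, _sep, argument = action.partition("[")
        observation = TOOLS[name](argument.rstrip("]"))
        transcript += f"\nObservation: {observation}"
    return "No answer within the step limit."


answer = react("What's the weather in Tokyo, and should I pack an umbrella?", scripted_model)
print(answer)
```

The step limit matters in real agents: without it, a model that never produces a final answer would keep calling tools indefinitely.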

Other Evolving Techniques to Watch

The field moves fast, and other promising techniques are emerging to optimize AI prompts.

Self-Consistency generates several reasoning paths and picks the most common answer, like taking a consensus from experts. Tree of Thoughts expands on CoT by exploring multiple reasoning branches and backing out of the unpromising ones, like strategic brainstorming. Meta-Prompting involves prompting the AI to improve its own prompts, teaching it to be a better version of itself. Self-reflection techniques have the AI critique and revise its own answers, while Active-Prompt uses the model's uncertainty to decide which questions most deserve hand-written example reasoning. Both aim to cut down on confident but wrong answers.

These cutting-edge techniques show where prompt optimization is heading: toward AI that actively collaborates in solving complex, real-world problems.
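Of these, Self-Consistency is the easiest to try yourself: ask the same question several times at a non-zero temperature, then take a vote. The sketch below covers only the voting step, and the `sampled` list is invented data standing in for final answers extracted from five separate model runs.

```python
from collections import Counter


def majority_answer(answers: list[str]) -> tuple[str, float]:
    """Return the most common answer and the share of samples that agree."""
    counts = Counter(a.strip().lower() for a in answers)
    answer, votes = counts.most_common(1)[0]
    return answer, votes / len(answers)


sampled = ["26", "26", "24", "26 ", "36"]  # made-up answers from five runs
answer, agreement = majority_answer(sampled)
print(f"{answer} (agreement: {agreement:.0%})")
```

A low agreement score is a hint that the question is hard or the prompt is ambiguous, which makes it a handy signal for where to spend review time.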

Common Pitfalls and How to Troubleshoot Your Prompts

Even the most experienced users run into problems when trying to optimize AI prompts. Most issues come down to communication problems that are surprisingly easy to fix.

Why is My AI Ignoring Instructions or Hallucinating?

It's frustrating when your AI ignores instructions or confidently invents facts (hallucinates).

When your AI won't follow instructions, your prompt is likely confusing. You might have buried key details or given conflicting directions. The fix is simple: put your most important instructions at the beginning or end of your prompt. Use clear separators like ### INSTRUCTION ### and break complex tasks into smaller pieces.

Hallucinations are trickier. The AI isn't lying; it's generating text that sounds plausible based on its training data, a problem that was widely reported with early AI chatbots. To reduce them, lower the temperature setting (for example, to 0.2-0.3), which makes the output less random and more predictable, and provide reliable source material instead of asking the AI to recall facts from memory.
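Why does temperature matter? Roughly speaking, the model scores each candidate next token, and temperature rescales those scores before they become probabilities. The sketch below shows that arithmetic with made-up scores for three candidates; actual models work over very large vocabularies, and providers may apply additional sampling settings on top.

```python
import math


def softmax_with_temperature(logits: list[float], temperature: float) -> list[float]:
    """Turn raw scores into probabilities; temperature must be greater than 0."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 1.0, 0.5]  # hypothetical scores for three candidate tokens
low = softmax_with_temperature(logits, 0.2)
high = softmax_with_temperature(logits, 1.0)
print("temperature 0.2:", [round(p, 3) for p in low])
print("temperature 1.0:", [round(p, 3) for p in high])
```

At the lower temperature, nearly all the probability lands on the top-scoring token, so the model picks the same likely continuation almost every time. That makes output more consistent, though it doesn't by itself guarantee the likely continuation is true.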

Fixing Incorrect Formatting and Generic Responses

Getting bland, repetitive, or incorrectly formatted responses is common. This usually happens when prompts are too vague. Saying "rewrite this" often results in minor tweaks. Instead, be specific about the style, tone, and format you want. Try "rewrite this paragraph to sound more conversational and confident, using active voice."

Format problems are easy to solve. If you asked for a bulleted list and got a paragraph, the AI misunderstood. Always specify the exact format: "respond as a JSON object," "create a numbered list," or "use a table with three columns." Showing an example of your desired format is even better. Negative constraints help too: state plainly what you don't want, such as "no introduction" or "don't use jargon."

Overcoming Refusals and Ethical Problems

Sometimes an AI will refuse a request, usually to follow safety guidelines or because it's confused. When you hit a refusal, try rephrasing your request or breaking the task into simpler steps. A clearer prompt can often get you unstuck.

AI bias is a more serious issue. Models learn from human-created content and can perpetuate harmful stereotypes. A striking example of AI's racial blind spots came when an AI tool altered a user's headshot to give her lighter skin. To combat bias, be explicit about wanting neutral, inclusive language. Ask for diverse perspectives when appropriate and always review AI outputs with a critical eye.

Managing Token Usage and Response Times

Behind every AI interaction are "tokens," the units AI systems use to count and charge for processed text (both input and output). High token usage can be costly, especially with detailed prompts or techniques like Chain-of-Thought. While specificity is key, avoid unnecessarily wordy language. Get to the point without sacrificing clarity.
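For a quick sanity check before sending a long prompt, you can estimate tokens and cost with back-of-the-envelope math. The sketch below assumes roughly four characters per token, a loose rule of thumb for English text; real tokenizers vary by model and language, and the prices are placeholders, not any provider's actual rates.

```python
def estimate_tokens(text: str) -> int:
    """Rough estimate only: about 4 characters per token for English text."""
    return max(1, round(len(text) / 4))


def estimate_cost(
    prompt: str,
    expected_output_tokens: int,
    input_price_per_1k: float,
    output_price_per_1k: float,
) -> float:
    """Combine input and output token estimates into a single cost figure."""
    input_cost = estimate_tokens(prompt) / 1000 * input_price_per_1k
    output_cost = expected_output_tokens / 1000 * output_price_per_1k
    return input_cost + output_cost


prompt = "Summarize this article in three bullet points for busy executives."
cost = estimate_cost(
    prompt,
    expected_output_tokens=200,
    input_price_per_1k=0.50,   # placeholder price
    output_price_per_1k=1.50,  # placeholder price
)
print(f"~{estimate_tokens(prompt)} input tokens, estimated cost {cost:.4f}")
```

For exact counts, use the tokenizer published for the specific model you're calling.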

Slow responses usually mean your prompt is too complex or the system is busy. Try simplifying your context or breaking big tasks into smaller queries. For routine work, consider using faster, lighter models that can handle simpler tasks more efficiently.

The Future is Collaborative: Tools and Trends in Prompt Engineering

The world of prompt engineering is changing fast. What started as a solo craft of tweaking prompts until something worked is becoming something much more sophisticated and collaborative. We're moving beyond the days of endless trial and error toward smarter, automated ways to optimize AI prompts.

The Evolution from Manual Tweaking to Automated Optimization

The days of manually tweaking prompts are fading. Automated prompt optimization is changing how we work with AI, using machine learning to analyze prompts and spot patterns humans might miss.

Feedback-driven learning is leading this charge. Systems now learn from user reactions to adjust their responses. For example, one e-commerce company saw customer satisfaction jump 25% after implementing a system that refined chatbot prompts based on user feedback.

Reinforcement Learning from Human Feedback (RLHF) takes this further: people rate the model's outputs, and those ratings are used to train the model toward responses humans prefer, so it learns from both successes and failures. The most exciting development may be self-improving agents that learn from every interaction, becoming more helpful over time. This shift means we can optimize AI prompts faster and more effectively than ever.

Why Prompt Engineering is Evolving, Not Disappearing

You might hear that prompt engineering will become obsolete as AI gets smarter, with some arguing that prompt engineering isn't the future as a job role. However, prompt engineering isn't disappearing. It's evolving into something more strategic.

The core need to communicate human intent to AI systems will always remain. As AI becomes more capable, this communication becomes more important, not less. The focus is shifting from precise wording toward problem formulation—getting clear on what we want to achieve and guiding the AI strategically.

We're evolving from prompt writers to AI orchestrators, conducting an AI symphony rather than playing one instrument. This human-AI collaboration requires understanding how to direct AI's capabilities toward our goals.

Tools for Collaborative Prompting and Version Control

As prompt engineering becomes central to how we work, we need better tools to manage our prompts, much like software developers use Git to track code. The best prompt management platforms now offer powerful features like versioning prompts to save every change, A/B testing to compare approaches, and collaborative workspaces that turn prompt engineering into a team effort.

This is exactly why we built Potions. We believe the best prompts come from community collaboration, not isolated work. On Potions, you don't start from scratch; you build on the collective wisdom of other prompt engineers. You can find proven prompts, remix them for your needs, and contribute back to help others.

Every prompt gets automatic version control—we save every change and provide a stable URL, so you can experiment freely. This knowledge sharing creates a growing library of AI expertise, allowing us to collectively optimize AI prompts at a scale no individual could achieve alone.
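To see what versioning a prompt means in practice, here's a tiny in-memory sketch. It is only an illustration of the general idea and says nothing about how Potions or any other platform is implemented; the `PromptHistory` class and its methods are hypothetical.

```python
import hashlib


class PromptHistory:
    """Keep every saved revision of a prompt, addressable by a short content hash."""

    def __init__(self) -> None:
        self._versions: list[tuple[str, str]] = []  # (version id, prompt text)

    def save(self, text: str) -> str:
        """Store the text and return its id; re-saving the latest text is a no-op."""
        version_id = hashlib.sha256(text.encode("utf-8")).hexdigest()[:8]
        if not self._versions or self._versions[-1][0] != version_id:
            self._versions.append((version_id, text))
        return version_id

    def get(self, version_id: str) -> str:
        """Look up an earlier revision by id."""
        for stored_id, text in self._versions:
            if stored_id == version_id:
                return text
        raise KeyError(version_id)

    def latest(self) -> str:
        return self._versions[-1][1]

    def __len__(self) -> int:
        return len(self._versions)


history = PromptHistory()
first = history.save("Summarize this article.")
second = history.save("Summarize this article in three bullet points for busy executives.")
print(first, "->", second)
print(history.get(first))
```

Because every revision stays retrievable, you can compare two versions side by side or roll back when an experiment makes things worse.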

Conclusion: From Prompt Engineer to AI Orchestrator

We've explored how to optimize AI prompts, from basics like clarity and context to advanced methods like Chain-of-Thought and RAG. We've also tackled common frustrations like ignored instructions and hallucinations.

Here's the key takeaway: the quality of your AI output mirrors the quality of your prompt. These powerful models aren't mind readers; they are pattern-matching systems that need clear guidance to shine.

Think of yourself as evolving into an AI orchestrator. You're no longer just asking questions; you're conducting a digital symphony to achieve a specific goal. Your ability to craft precise prompts is your superpower.

Prompt engineering is both art and science, requiring methodical testing and creative vision. This field moves fast, so continuous learning is essential. The most successful AI orchestrators stay curious, experiment boldly, and learn from both successes and failures.

The future belongs to those who master this collaborative dance between human creativity and machine capability. By embracing this process and connecting with others, you're positioning yourself at the forefront of a technological revolution.

Ready to take your AI collaboration to the next level? Explore and remix the world's best prompts on Potions and join a community that's collectively pushing the boundaries of what AI can achieve.