From Novice to Ninja: A Comprehensive Guide to Prompt Engineering

Why Every AI User Needs a Prompt Engineering Guide

A prompt engineering guide is essential for anyone using AI tools like ChatGPT, Claude, or Gemini. Prompt engineering is the art and science of designing instructions for AI models to get the best possible outputs.

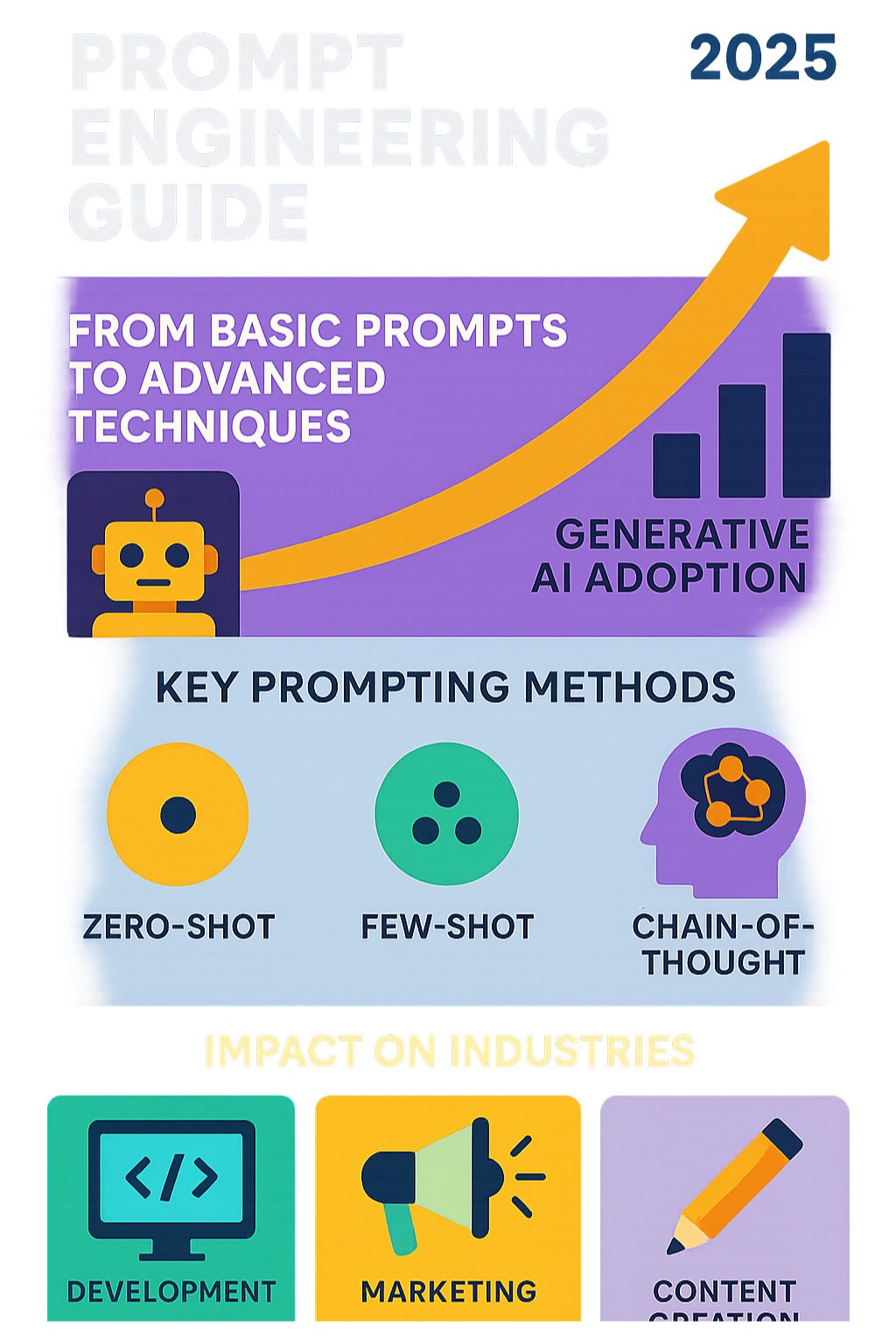

Quick Guide to Prompt Engineering Essentials:

- What it is: Crafting effective instructions for AI models.

- Why it matters: Turns vague AI responses into precise, useful outputs.

- Core components: Clear instructions, context, examples, and a defined output format.

- Key techniques: Zero-shot, few-shot, and chain-of-thought prompting.

- Best practices: Be specific, iterate, provide context, and validate results.

The rise of large language models means we now interact with computers using natural language instead of complex code. But there's a catch: the quality of your prompt directly determines the quality of the AI's response.

As one industry expert put it: "You don't need to be a data scientist or a machine learning engineer – everyone can write a prompt. However, crafting the most effective prompt can be complicated."

This skill has become essential for professionals across all industries. Whether you're a developer generating code, a marketer crafting campaigns, or a writer seeking creative inspiration, mastering prompt engineering opens up AI's true potential.

This guide will transform your trial-and-error approach into a systematic method for getting consistent, high-quality results from AI tools.

The Anatomy of a Perfect Prompt: Core Components and Best Practices

Think of a prompt as your conversation starter with an AI. Just like giving directions, the clearer and more specific your prompt, the better the AI understands what you want.

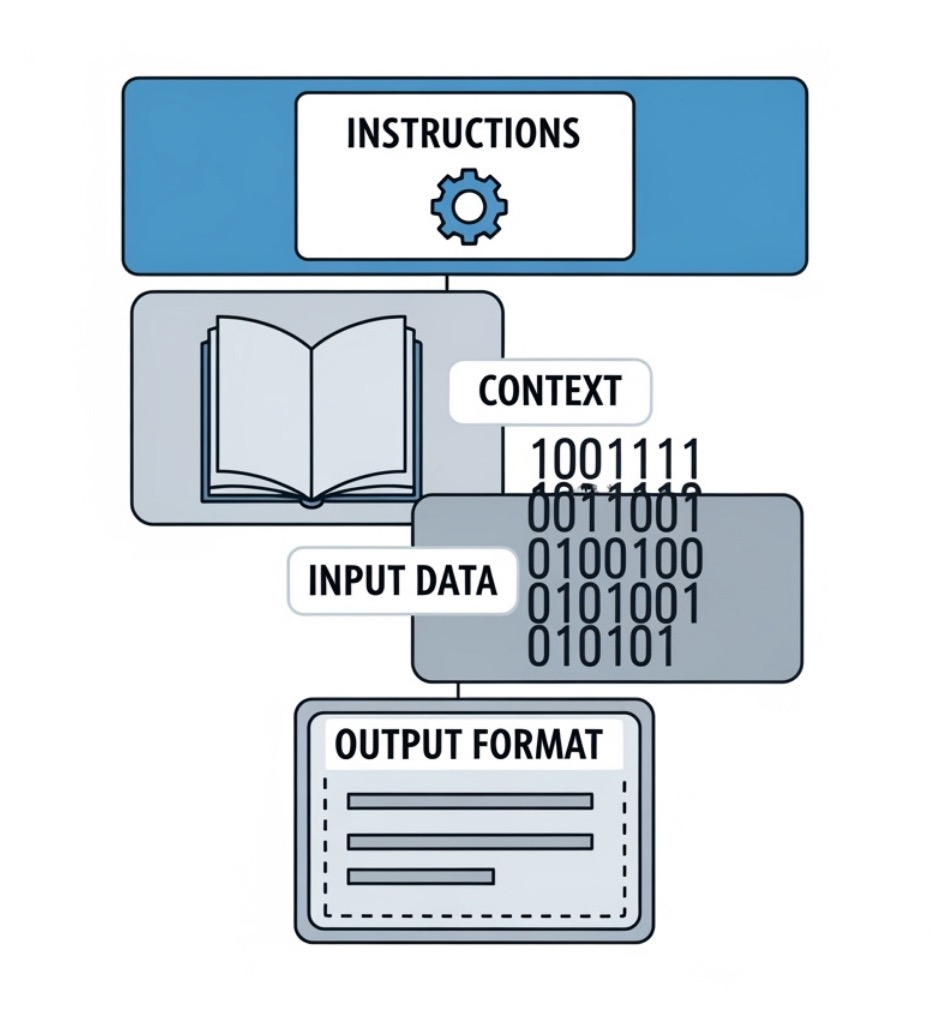

An effective prompt combines several key ingredients: a role or persona (e.g., a technical expert), context (background information), a clear task, constraints (boundaries), a desired output format (like a list or table), and examples to clarify complex requests.

When you combine these elements, you move from a vague request like "Write about AI" to a powerful one: "Act as a healthcare expert and write a 500-word article on how AI assists doctors in diagnosis, formatted with headings and bullet points for key benefits."
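To make the ingredients concrete, here is a minimal sketch of a helper that assembles a prompt from them. The function name `build_prompt`, its parameters, and the label wording ("Context:", "Task:", and so on) are illustrative assumptions, not a standard API.

```python
def build_prompt(task, role=None, context=None, constraints=None,
                 output_format=None, examples=None):
    """Assemble a prompt from optional components, skipping any left empty."""
    parts = []
    if role:
        parts.append(f"Act as {role}.")
    if context:
        parts.append(f"Context: {context}")
    parts.append(f"Task: {task}")
    if constraints:
        parts.append("Constraints: " + "; ".join(constraints))
    if output_format:
        parts.append(f"Output format: {output_format}")
    for example in examples or []:
        parts.append(f"Example: {example}")
    return "\n".join(parts)


# Rebuild the healthcare request from its individual ingredients.
prompt = build_prompt(
    task="Write an article on how AI assists doctors in diagnosis.",
    role="a healthcare expert",
    constraints=["about 500 words"],
    output_format="headings, with bullet points for key benefits",
)
print(prompt)
```

Keeping the components separate makes it easier to change one ingredient (say, the role) and compare the results.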

Creating the perfect prompt is an iterative process. Start simple, then refine based on the AI's output. The goal is clarity and precision. When the AI misunderstands, adjust your prompt and try again. For more insights, check out the best practices for prompt engineering with the OpenAI API.

Controlling Style, Persona, and Format

One of the most powerful aspects of prompt engineering is shaping how the AI responds. You can transform the AI's personality and output style with a few words.

Ask for a "conversational tone" to get a friendly response, or tell the AI to "act as a senior software engineer" for technical expertise. The AI adapts its vocabulary and knowledge to match the requested persona. Research on marked personas in LLMs shows how effectively AI can adopt different roles, which also helps guide it away from generic, potentially biased responses.

The output format makes AI work for your specific needs. Ask for JSON for structured data, Markdown for formatted articles, or tables for organized information that you can export to spreadsheets. This control turns a general assistant into a specialized tool for your workflow.
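If you ask for JSON, it helps to check that you actually received it. The sketch below pairs a format instruction with a small parser built on Python's standard `json` module. The key names in `REQUIRED_KEYS` and the sample reply are hypothetical, chosen only for illustration.

```python
import json

REQUIRED_KEYS = {"title", "summary", "tags"}  # hypothetical schema for this example


def format_instruction(keys):
    """Build the sentence you would append to a prompt to request JSON output."""
    return ("Respond with a single JSON object containing exactly these keys: "
            + ", ".join(sorted(keys)) + ". Do not add any other text.")


def parse_json_reply(reply, required_keys):
    """Parse a model reply as JSON; raise ValueError if it is invalid or incomplete."""
    try:
        data = json.loads(reply)
    except json.JSONDecodeError as exc:
        raise ValueError(f"Reply is not valid JSON: {exc}") from exc
    if not isinstance(data, dict):
        raise ValueError("Reply is not a JSON object")
    missing = required_keys - set(data)
    if missing:
        raise ValueError(f"Reply is missing keys: {sorted(missing)}")
    return data


# A made-up reply standing in for real model output.
sample_reply = '{"title": "AI in Clinics", "summary": "A short overview.", "tags": ["ai"]}'
print(format_instruction(REQUIRED_KEYS))
print(parse_json_reply(sample_reply, REQUIRED_KEYS)["title"])
```

When parsing fails, a common next step is to retry with a stricter format instruction.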

Chat vs. Completion Models: Key Prompting Differences

Understanding the difference between chat and completion models is key. Completion models act like smart autocomplete, taking an input and generating a single continuation. They are best for straightforward, single-turn tasks.

Chat models are designed for multi-turn dialogue. They keep track of previous exchanges through a conversational context that includes system messages (overall instructions), user messages (your inputs), and assistant messages (the AI's past responses). In most APIs, this history is sent along with each new request rather than stored in the model itself. This structure, partly based on OpenAI's Chat Markup Language approach, allows for coherent, back-and-forth conversations. The API differences between model types affect how you structure prompts, so knowing which you're using is crucial for getting good results.
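The difference is easiest to see in data. The sketch below represents a chat history as a list of role/content dictionaries, a shape used by several chat APIs (exact field names vary by provider, so treat them as an assumption). It then flattens the same history into a single text block of the kind a completion-style model would continue. The helper `to_completion_prompt` is hypothetical, and no real API is called.

```python
def to_completion_prompt(messages):
    """Flatten a chat history into one text block for a completion-style model."""
    lines = [f"{m['role'].capitalize()}: {m['content']}" for m in messages]
    lines.append("Assistant:")  # leave the next turn open for the model to continue
    return "\n".join(lines)


# Chat style: each turn is a separate message with an explicit role.
messages = [
    {"role": "system", "content": "You are a concise travel assistant."},
    {"role": "user", "content": "Suggest a city for a weekend trip."},
    {"role": "assistant", "content": "Lisbon is a good choice."},
    {"role": "user", "content": "What should I pack?"},
]

# Completion style: the same conversation as a single string.
print(to_completion_prompt(messages))
```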

The Importance of Validating AI Responses

Even the best prompts can't guarantee perfect AI responses, making validation essential. AI models can make convincing mistakes.

AI hallucinations are a major concern: the AI states fabricated "facts" or cites non-existent sources. It's not lying, but generating plausible text without a concept of truth. Fact-checking is crucial, especially for important information. Asking the AI to cite sources or explain its reasoning can make errors easier to spot, but verify the citations themselves, since they can be fabricated too.

Bias detection is also critical, as models can reflect biases from their training data. Validation involves checking for and minimizing these issues through prompt refinement.

Human oversight is irreplaceable. You are the final judge of the AI's accuracy and relevance. Think of AI as a powerful tool that amplifies your capabilities, not a replacement for critical thinking. The iterative refinement process—analyzing, adjusting, and retrying—is where real skill develops. Tools like SelfCheckGPT for detecting hallucinations can help, but your judgment is paramount.

Fundamental Prompting Techniques: From Zero to Few-Shot

Think of teaching a new colleague a task. Sometimes you give an instruction (zero-shot), sometimes you show one example (one-shot), and for complex tasks, you provide several examples (few-shot). This is in-context learning: teaching an AI model within the prompt itself, without retraining it.

- Zero-shot prompting gives the AI a task with no examples, relying on its existing knowledge.

- One-shot prompting adds a single example to clarify your expectations.

- Few-shot prompting provides multiple examples (usually 3-5) to help the AI recognize patterns for more accurate and consistent results, which is powerful for tasks like data extraction.

These techniques are foundational because they leverage the AI's natural learning abilities. Research on ChatGPT-4's Zero-Shot Learning performance shows impressive results even without examples, but highlights how much performance improves when we provide them.

Zero-Shot Prompting in Action

Zero-shot prompting is ideal for straightforward tasks where you need a quick response. For example, to analyze sentiment, you could prompt: "Classify the sentiment of the following text as positive, neutral, or negative: 'The new movie was okay, not great but not terrible.'" The AI should respond with "Neutral."

The strength of this method is its simplicity and flexibility. However, it can lack precision for complex tasks and may produce inconsistent outputs, as the AI relies entirely on its general training.

Use zero-shot prompting for simple tasks, initial exploration, or when speed is more important than perfect precision.
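Here is a minimal sketch of the sentiment example as code. It builds the zero-shot prompt and tidies up a free-form reply such as "Neutral." into one of the allowed labels. The function names are illustrative, and the reply passed in at the end is a stand-in, not real model output.

```python
LABELS = ("positive", "neutral", "negative")


def zero_shot_sentiment_prompt(text):
    """Build a zero-shot classification prompt: an instruction and no examples."""
    options = ", ".join(LABELS[:-1]) + f", or {LABELS[-1]}"
    return f"Classify the sentiment of the following text as {options}: '{text}'"


def normalize_label(reply):
    """Map a reply like 'Neutral.' to one of LABELS, or None if it does not match."""
    cleaned = reply.strip().strip(".!\"'").lower()
    return cleaned if cleaned in LABELS else None


print(zero_shot_sentiment_prompt("The new movie was okay, not great but not terrible."))
print(normalize_label("Neutral."))
```

A `None` result is a useful signal that the reply drifted from the requested labels and the prompt may need tightening.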

Mastering Few-Shot Prompting

For precision, accuracy, and consistency, few-shot prompting is your secret weapon. By showing the AI several high-quality examples, you give it a masterclass in what you want.

Imagine you need to extract customer information from orders. A few-shot prompt would look like this:

"Extract the customer's name, pizza type, and quantity from the following orders and present them in JSON format.

Order: 'I'd like a large pepperoni pizza, my name is John.'

JSON: {"customer_name": "John", "pizza_type": "pepperoni", "quantity": "one large"}

Order: 'Please deliver two medium cheese pizzas to Sarah.'

JSON: {"customer_name": "Sarah", "pizza_type": "cheese", "quantity": "two medium"}

Order: 'Could I get one small veggie pizza? My name is Emily.'

JSON:"

The AI will follow the pattern and respond with: {"customer_name": "Emily", "pizza_type": "veggie", "quantity": "one small"}

This technique also excels at style replication. To get the best results, provide high-quality, diverse, and consistently formatted examples. Clearly separate each input-output pair. As one expert notes, "The most important best practice is to provide (one shot / few shot) examples within a prompt." By crafting excellent examples, you turn a general AI into a specialized expert for your needs.
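Writing few-shot prompts by hand makes it easy to let the formatting drift between examples. One way to keep every input-output pair consistent is to generate the prompt from data, as in this minimal sketch. The function name `few_shot_prompt` and the key names are illustrative assumptions.

```python
import json


def few_shot_prompt(instruction, examples, new_input):
    """Build a few-shot prompt from (order, extracted_fields) pairs."""
    lines = [instruction, ""]
    for order, extracted in examples:
        lines.append(f"Order: {order}")
        lines.append(f"JSON: {json.dumps(extracted)}")  # same format for every example
        lines.append("")
    lines.append(f"Order: {new_input}")
    lines.append("JSON:")  # left open for the model to complete
    return "\n".join(lines)


examples = [
    ("I'd like a large pepperoni pizza, my name is John.",
     {"customer_name": "John", "pizza_type": "pepperoni", "quantity": "one large"}),
    ("Please deliver two medium cheese pizzas to Sarah.",
     {"customer_name": "Sarah", "pizza_type": "cheese", "quantity": "two medium"}),
]

prompt = few_shot_prompt(
    "Extract the customer's name, pizza type, and quantity from the following "
    "orders and present them in JSON format.",
    examples,
    "Could I get one small veggie pizza? My name is Emily.",
)
print(prompt)
```

Because `json.dumps` writes every example, the keys and quoting stay identical across pairs, which is the consistency the model picks up on.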

Advanced Strategies in this Comprehensive Prompt Engineering Guide

Once you've mastered the basics, advanced techniques can help you tackle complex problems and get more reliable results.

Chain-of-Thought (CoT) prompting teaches the AI to "show its work." By adding a simple phrase like "think step-by-step," you guide the AI to generate intermediate reasoning before its final answer. This improves accuracy on complex tasks like arithmetic and makes the AI's logic transparent, helping you spot errors. Research like Investigating Chain-of-thought with ChatGPT for Stance Detection explores its applications.
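In practice, it helps to ask for the reasoning and the final answer in a form you can separate afterward. The sketch below appends a step-by-step instruction and pulls the final answer out of a reply. The "Answer:" line convention is an assumption made for this example, and the sample reply is made up rather than real model output.

```python
import re


def chain_of_thought_prompt(question):
    """Ask for intermediate reasoning followed by a clearly marked final answer."""
    return (f"{question}\n\nThink step-by-step, then give the final answer on "
            "its own line in the form 'Answer: <value>'.")


def extract_final_answer(reply):
    """Return the text after the last 'Answer:' line, or None if there is none."""
    matches = re.findall(r"^Answer:\s*(.+)$", reply, flags=re.MULTILINE)
    return matches[-1].strip() if matches else None


# A made-up reply showing the reasoning steps before the answer.
sample_reply = (
    "There are 3 boxes with 4 pens each.\n"
    "3 * 4 = 12.\n"
    "Answer: 12"
)
print(chain_of_thought_prompt("A shop has 3 boxes of 4 pens. How many pens in total?"))
print(extract_final_answer(sample_reply))
```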

Self-Consistency builds on CoT by generating multiple reasoning paths for the same problem and selecting the most consistent answer via a "majority vote." This significantly improves the robustness of the final response.
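The voting step itself is simple. This minimal sketch assumes you have already sampled several replies to the same prompt and extracted a final answer from each; the sampled answers below are invented for illustration.

```python
from collections import Counter


def majority_vote(answers):
    """Return (most common answer, its share of valid votes); None entries are ignored."""
    votes = Counter(a for a in answers if a is not None)
    if not votes:
        return None, 0.0
    answer, count = votes.most_common(1)[0]
    return answer, count / sum(votes.values())


# Final answers from five hypothetical reasoning paths; one produced no answer.
sampled_answers = ["12", "12", "14", None, "12"]
answer, agreement = majority_vote(sampled_answers)
print(answer, agreement)
```

The agreement share doubles as a rough confidence signal: a low value suggests the question deserves a closer look.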

Understanding Context Windows and Token Limits

AI models don't have unlimited memory. Understanding context windows and token limits is crucial. Words are broken down into "tokens" for processing. The context window is the AI's short-term memory—the number of tokens it can consider at once, including your prompt and its response. If a conversation exceeds this limit, older information is forgotten.

Context window sizes vary widely, from a few thousand tokens in older models to 100,000 or more in many newer ones. This limit affects prompt design: even with a large window, it pays to be token-efficient, providing necessary information without being overly verbose. For long conversations, summarization techniques can help keep the most relevant information within the window.
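One simple way to stay inside a token budget is to keep the system message and drop the oldest turns first. The sketch below does this with a very rough token estimate of about four characters per token, which is only an approximation for English text. A real application would use the tokenizer for its specific model. The function names are illustrative.

```python
def estimate_tokens(text):
    """Very rough estimate, assuming about 4 characters per token."""
    return max(1, len(text) // 4)


def trim_history(messages, max_tokens):
    """Keep system messages plus the most recent turns that fit the budget."""
    system = [m for m in messages if m["role"] == "system"]
    rest = [m for m in messages if m["role"] != "system"]
    budget = max_tokens - sum(estimate_tokens(m["content"]) for m in system)
    kept = []
    for message in reversed(rest):  # walk from newest to oldest
        cost = estimate_tokens(message["content"])
        if cost > budget:
            break
        kept.append(message)
        budget -= cost
    return system + list(reversed(kept))  # restore chronological order


history = [
    {"role": "system", "content": "Be brief."},
    {"role": "user", "content": "a" * 40},
    {"role": "assistant", "content": "b" * 40},
    {"role": "user", "content": "c" * 40},
]
trimmed = trim_history(history, max_tokens=25)
print([m["role"] for m in trimmed])
```

Instead of discarding the dropped turns, you could replace them with a short summary, in line with the summarization approach mentioned above.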

An Advanced Prompt Engineering Guide to Complex Tasks

For truly complex applications, two advanced techniques are invaluable.

Retrieval-Augmented Generation (RAG) addresses the fact that an LLM's knowledge is static. RAG connects the AI to an external knowledge base (like internal company documents or up-to-date articles). The AI first retrieves relevant information and then uses it to inform its response. This grounds the answer in retrieved, current data, which can significantly reduce hallucinations and improve accuracy.
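The retrieve-then-generate flow can be sketched in a few lines. To stay self-contained, this toy version ranks documents by simple word overlap; production systems typically use embedding-based search instead. The documents, function names, and prompt wording are all illustrative assumptions.

```python
import re


def tokenize(text):
    """Lowercase a text and split it into a set of words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question, documents, top_k=2):
    """Return up to top_k documents sharing the most words with the question."""
    q = tokenize(question)
    ranked = sorted(documents, key=lambda d: len(q & tokenize(d)), reverse=True)
    return [d for d in ranked[:top_k] if q & tokenize(d)]


def rag_prompt(question, documents, top_k=2):
    """Build a prompt that asks the model to answer from retrieved sources only."""
    passages = retrieve(question, documents, top_k)
    sources = "\n".join(f"[{i}] {p}" for i, p in enumerate(passages, start=1))
    return ("Answer the question using only the sources below. "
            "If the sources do not contain the answer, say so.\n\n"
            f"Sources:\n{sources}\n\nQuestion: {question}")


# A tiny made-up knowledge base.
documents = [
    "Refunds are issued within 14 days of a return request.",
    "Our office is closed on public holidays.",
    "Return requests must include the order number.",
]
print(rag_prompt("How many days do refunds take after a return request?", documents))
```

Numbering the sources also makes it easier to ask the model to point at the passage it relied on, which supports later fact-checking.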

Prompt Chaining breaks down large tasks into smaller, manageable sub-tasks. Each step is handled by a sequential prompt, where the output of one becomes the input for the next. For example, you could chain prompts to brainstorm product ideas, then outline a marketing plan, then write a press release. This structured workflow helps manage complexity and ensures high-quality results for multi-step processes.
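A chain like the one described above can be sketched as a loop that feeds each output into the next template. The `call_model` argument stands for whatever function sends a prompt to your model. Here a hypothetical `fake_model` stub takes its place so the example runs offline; its replies are placeholders, not real model output.

```python
def run_chain(steps, initial_input, call_model):
    """Run prompts in sequence, passing each output into the next template."""
    outputs = []
    current = initial_input
    for template in steps:
        current = call_model(template.format(previous=current))
        outputs.append(current)
    return outputs


STEPS = [
    "Brainstorm three product ideas for: {previous}",
    "Outline a marketing plan for the strongest idea below:\n{previous}",
    "Write a short press release based on this plan:\n{previous}",
]


def fake_model(prompt):
    """Stand-in for a real model call; echoes the first line of the prompt."""
    return f"<reply to: {prompt.splitlines()[0]}>"


outputs = run_chain(STEPS, "reusable water bottles", fake_model)
for step_number, output in enumerate(outputs, start=1):
    print(step_number, output)
```

Keeping every intermediate output, not just the last one, lets you inspect where a chain went wrong and refine only that step.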

Practical Applications and Real-World Scenarios

The power of prompt engineering is changing how we work across countless industries. Here are some of its most impactful real-world applications.

Content Creation

Well-crafted prompts are revolutionizing content workflows. For SEO optimization, AI can generate keyword-rich articles and product descriptions. In scriptwriting, it helps draft video scripts and podcast outlines. It also streamlines email campaigns by automating responses and drafting personalized outreach messages, turning hours of work into minutes.

Software Development

Prompt engineering is becoming essential for developers. Code generation is now highly sophisticated, with AI writing functions, creating scripts, and translating between languages to speed up development. For debugging, AI can identify errors, suggest fixes, and explain complex code in plain English. An Analysis on Code Generation Capabilities shows how advanced this has become.

Education

The education sector is using prompt engineering to create more accessible personalized learning. AI can generate custom study materials and practice questions adapted to a student's understanding. Educators also save time by using prompts to create quizzes and discussion topics for any subject.

Business

Professionals are using prompt engineering to manage information overload. For market analysis, AI can extract key trends from industry data. Report summarization is another major time-saver, condensing long financial or project reports into actionable insights. Companies are leveraging these capabilities for marketing, public relations, and customer relations.

These applications are just the beginning. As prompt engineering skills grow, the possibilities for leveraging AI become nearly limitless, though each use case requires a custom approach.

Frequently Asked Questions about Prompt Engineering

As you start your journey, some common questions may arise. Here are answers to the most frequent ones.

How long does it take to learn prompt engineering?

You can learn the basics in just a few hours. The core skills are writing clear instructions and providing good context. However, mastery is an ongoing journey. Like any craft, it requires practice and experimentation.

Prompt engineering is iterative, and you'll constantly test and refine your work. Your skills will grow with each project. The good news is that community resources accelerate your learning, as you can build upon the proven work of others.

What is the most difficult part of prompt engineering?

While anyone can write a prompt, crafting an effective one can be complex. The biggest challenges involve nuance and subtlety.

- Sensitivity: AI models are highly responsive to small changes in wording, which can transform the output in unexpected ways.

- Model Limitations: Understanding that LLMs can make factual errors (hallucinations), struggle with certain tasks, and reflect biases is crucial.

- Complexity: Crafting prompts for multi-step tasks requires breaking down problems into logical sequences, which demands systematic refinement.

The work is iterative, and you rarely get it perfect on the first try. Each refinement teaches you more about communicating effectively with AI.

Conclusion: Evolve Your Skills and Collaborate

This prompt engineering guide has covered the essentials, from basic components to advanced strategies like Chain-of-Thought and RAG. You've learned to craft clear instructions, use context, apply zero-shot and few-shot techniques, and validate AI responses for accuracy—practical skills for any industry.

But the journey doesn't end here. Prompt engineering is evolving from a solo skill into a collaborative craft. The AI landscape changes rapidly, and staying ahead means continuous learning. The future isn't about isolated trial and error, but about building on the collective wisdom of a community.

This is where collaboration becomes a game-changer. Imagine having instant access to hundreds of proven, community-refined prompts that you can remix for your own needs.

At Potions, we believe prompt engineering is a team sport. Our community-powered AI prompt marketplace transforms isolated experimentation into a collaborative journey. Every prompt is automatically saved and versioned with a stable URL, making it easy to track changes, experiment, and build upon the work of others.

Whether you're a beginner or an expert, the power of community amplifies your capabilities. You can find new techniques, avoid common pitfalls, and contribute to a growing library of AI expertise. Your path to mastery is more effective when shared. Find collaborative prompting features and start evolving your skills with a community of AI enthusiasts today.

The future of AI interaction is collaborative. Your journey to becoming a prompt engineering ninja starts now.