Dive Deep: Unearthing the Best Prompt Engineering Repositories

The Power of Prompt Engineering Repositories

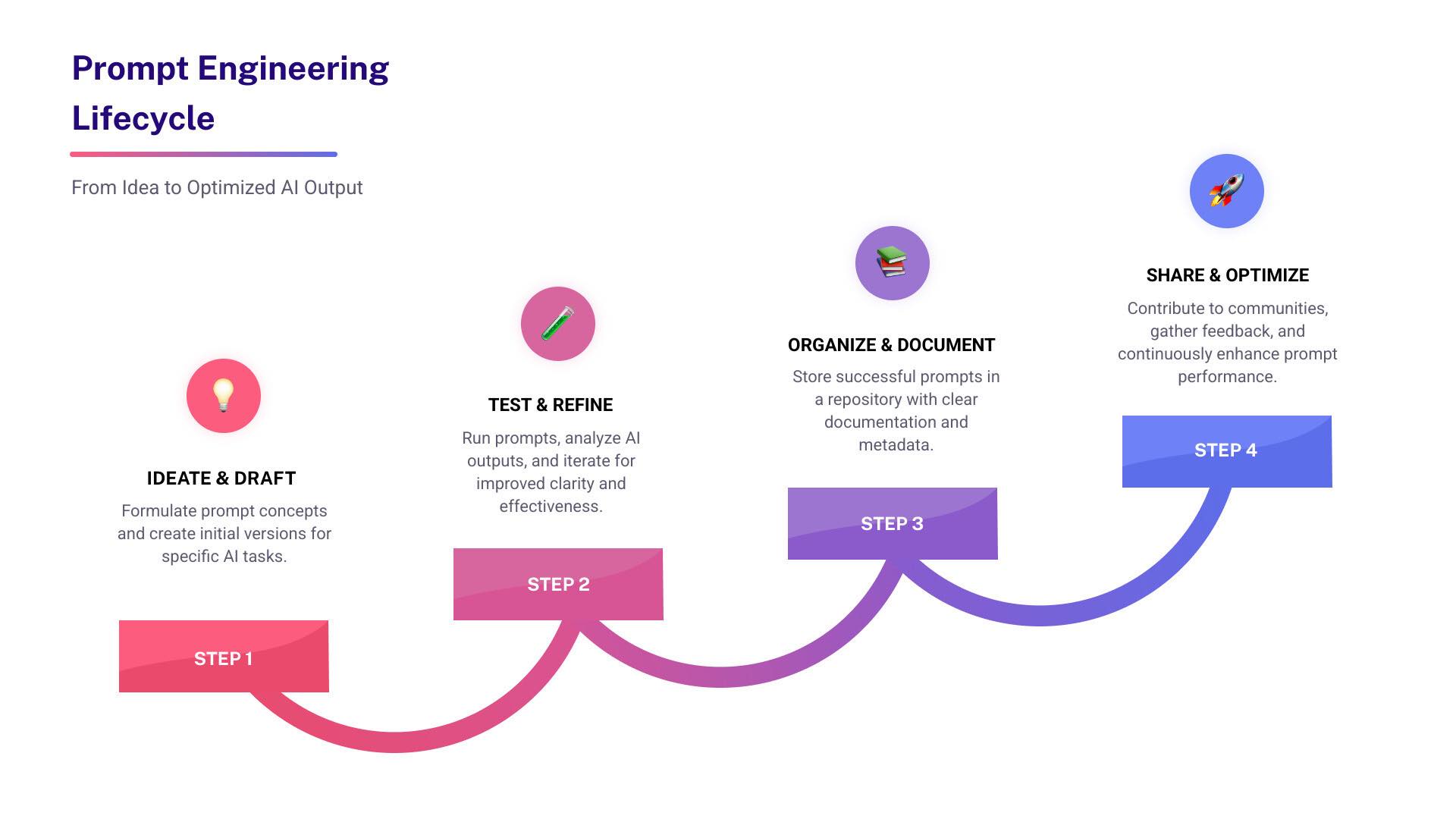

A prompt engineering repository is a crucial tool for anyone serious about AI. It's a central place where you can find, organize, and share prompts. Think of it as a digital library for talking to AI.

Here's what a prompt engineering repository helps you do:

- Find Prompts: Find pre-made prompts for many tasks.

- Learn Best Practices: See examples of what works well.

- Improve AI Outputs: Craft better instructions for your AI tools.

- Organize Your Work: Keep all your best prompts in one spot.

- Collaborate: Share and build on prompts with others.

Many professionals today use AI in their daily work. But getting the right output can feel like a puzzle. You might spend hours trying new prompts, hoping for a better answer. Or you lose that perfect prompt you created last week.

This frustration is common. You suspect others have already solved similar problems, but where do you find their solutions? And how do you keep your own prompt library neat and tidy?

That's where a prompt engineering repository shines. It moves prompt creation from a guessing game to a smart, shared process. It's about turning your individual efforts into a powerful, community-driven resource. This makes getting great AI results much easier and faster.

What Makes a Great Prompt Engineering Repository?

At its heart, a prompt engineering repository is an organized digital library just for AI prompts. Its main goal? To help us get the most out of large language models (LLMs) by offering prompts that are structured, clearly explained, and easy to find. Imagine trying to find a specific book in a library where everything is just piled on the floor: that's what managing prompts without a repository can feel like! A great repository clears away that chaos and makes finding and using the right prompt simple.

So, what makes one repository truly stand out? It makes prompts easy to find, use, and improve, whether you're just starting out or you're an AI wizard. The best repositories combine structured organization, robust version control, active community contributions, and an overall ease of use that makes learning and collaboration a breeze.

Core Components of a Public Prompt Engineering Repository

When we talk about a really useful prompt engineering repository, a few key ingredients come to mind:

First up is structured organization. This is huge! A messy pile of prompts isn't much better than no prompts at all. The best repositories sort prompts logically, making them easy to navigate. You'll often see prompts grouped into categories like:

- Personas: These prompts tell the AI to act like a specific character or take on a certain role. Think "Mock Interviewer," "Sports Coach," or even fun "Fictional Characters."

- Functions: These are prompts designed to do specific jobs or create output from text you give them. Examples include "Formal Rephrase" or "Title Suggest," covering everything from "Text Generation" to "Linguistics."

- Modifiers: These prompts change how the AI's output sounds or looks, adjusting things like tone, style, or format. Ever wanted something explained "ELI5" (Explain Like I'm 5) or in a "Limerick Styled" poem? That's what modifiers are for, helping with everything from "Output Formatting" to "Text Styling."

This smart organization helps us quickly grab the exact type of prompt we need, without sifting through endless examples.

Beyond categories, powerful search capabilities are a must. We should be able to find prompts by keywords, what they're used for, or even which AI model they work best with.
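To make the idea of categories plus search concrete, here is a minimal in-memory sketch. The `Prompt` record, its fields, the `search` function, and the model names `model-a`/`model-b` are all hypothetical illustrations, not the schema of any particular repository; a real one would typically sit behind a database or full-text index.

```python
from dataclasses import dataclass, field


@dataclass
class Prompt:
    """One entry in a hypothetical prompt repository."""
    title: str
    category: str                                    # e.g. "Persona", "Function", "Modifier"
    text: str
    tags: list[str] = field(default_factory=list)
    models: list[str] = field(default_factory=list)  # models it was tested on


def search(prompts, keyword=None, category=None, model=None):
    """Return prompts matching every filter that is given (None = no filter)."""
    results = []
    for p in prompts:
        if category is not None and p.category != category:
            continue
        if model is not None and model not in p.models:
            continue
        if keyword is not None:
            # Case-insensitive keyword match over title, text, and tags.
            haystack = " ".join([p.title, p.text, *p.tags]).lower()
            if keyword.lower() not in haystack:
                continue
        results.append(p)
    return results


library = [
    Prompt("Mock Interviewer", "Persona",
           "Act as a mock interviewer for a {role} position.",
           tags=["career", "interview"], models=["model-a"]),
    Prompt("Formal Rephrase", "Function",
           "Rephrase the following text in a formal tone:\n{text}",
           tags=["rewriting"], models=["model-a", "model-b"]),
    Prompt("ELI5", "Modifier",
           "Explain the answer as if I were five years old.",
           tags=["simplify"], models=["model-b"]),
]

print([p.title for p in search(library, category="Persona")])
print([p.title for p in search(library, keyword="formal", model="model-b")])
```

Combining filters with "and" semantics mirrors how most repository UIs narrow results as you add criteria.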

Every prompt also needs clear documentation. This means a straightforward explanation of what the prompt does, how to use it, and what kind of answer you can expect. It also includes any special settings or variables you can tweak.

And finally, usage examples are incredibly helpful. Seeing prompts in action makes all the difference. The best repositories show you the prompt's input and the AI's output side-by-side. This clearly illustrates how effective the prompt is and what you can do with it. It’s vital for understanding the subtle art of prompt engineering, where even a tiny change in words can vastly improve the AI's response.

The Power of Collaboration and Version Control

The real magic of a prompt engineering repository, especially for a platform that encourages teamwork, comes from its ability to build a community and use version control. Prompt engineering is quickly moving from a solo skill to a team sport!

With a strong version control system, every prompt is automatically saved and tracked. This lets us see how a prompt has changed over time, understand its journey, and even go back to earlier versions if something doesn't work out. It's like how software developers manage their code, ensuring a clear history and accountability.

This means we can experiment freely without worrying about losing our original work. We can tweak a prompt, test it out, and if it's not quite right, easily switch back to a stable version.
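The save-tweak-roll-back loop described above can be sketched in a few lines. This `PromptHistory` class is a hypothetical illustration of the idea, not how any specific platform stores versions; real repositories usually rely on Git or a database for this.

```python
class PromptHistory:
    """Minimal, hypothetical version history for a single prompt."""

    def __init__(self, initial_text):
        self.versions = [initial_text]   # versions[0] is version 1
        self.current = 0                 # index of the active version

    def commit(self, new_text):
        """Save a new version and make it the active one."""
        self.versions.append(new_text)
        self.current = len(self.versions) - 1
        return self.current + 1          # human-friendly version number

    def rollback(self, version_number):
        """Reactivate an earlier version without deleting later ones."""
        if not 1 <= version_number <= len(self.versions):
            raise ValueError(f"no version {version_number}")
        self.current = version_number - 1

    @property
    def text(self):
        return self.versions[self.current]


history = PromptHistory("Summarize this article.")
history.commit("Summarize this article in three bullet points.")
history.commit("Summarize this article in three witty bullet points.")
history.rollback(2)           # the "witty" experiment didn't work out
print(history.text)           # Summarize this article in three bullet points.
print(len(history.versions))  # 3: nothing was lost
```

Rolling back only moves a pointer, so the failed experiment stays in the history for later reference, much like a reverted commit in Git.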

The ability to build on others' work or remix existing prompts is a game-changer. We don't have to start from scratch every time. We can take a proven prompt, adjust it for our specific needs, and then share our improved version back with the community. This speeds up both innovation and learning. For instance, community-driven resources like the Prompt Engineering Guide on GitHub have earned thousands of stars, showing how much people appreciate shared resources and the incredible power of collective knowledge.

Sharing expertise turns repositories into living knowledge hubs where everyone's AI know-how grows. When we share our successful prompts and techniques, we add to a bigger pool of knowledge that helps everyone. This collaborative spirit ensures that the field of AI prompting keeps moving forward at a rapid pace.

Here are some key benefits that make collaborative prompt development so powerful:

- Accelerated Learning: Newcomers can quickly pick up best practices by studying successful prompts and how they've evolved.

- Reduced Duplication of Effort: Why reinvent the wheel when someone else has already crafted an amazing prompt for a similar task?

- Improved Quality: Prompts can be reviewed and refined by many contributors, leading to stronger, more effective solutions.

- Broader Application: A prompt made for one specific use can be remixed and adapted for countless others, making it even more useful.

- Community Building: Working together creates a sense of community among prompt engineers, encouraging discussions and shared problem-solving.

How to Leverage a Repository for Skill Development

A well-designed prompt engineering repository isn't just a place to store prompts. Think of it as a powerful learning playground! No matter if you're just starting your AI journey or you're already a pro, these repositories offer clear paths to boost your skills and understanding.

Learning by Example

One of the best ways to learn prompt engineering is by diving into existing, high-quality prompts. Repositories are like a treasure trove of real-world examples:

You can start by deconstructing complex prompts. This means taking apart instructions crafted by experienced prompt engineers. You'll see how they use special markers called delimiters, add important context, and guide the AI to get exactly what they want. It’s like peeking behind the curtain to find their best tricks!

Beyond single prompts, a good prompt engineering repository helps you spot repeating "prompt patterns." These are like popular recipes that solve common AI problems. For instance, the "Act As" pattern tells the AI to pretend to be someone (like "Act as a professional psychologist"). Other patterns include "Scaffolding," which breaks big tasks into smaller steps, or "Template," which makes sure the AI's answer follows a strict format. Learning these patterns gives you a deeper, more useful skill than just memorizing prompts.
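Because patterns are reusable recipes, they map naturally onto small functions that wrap any task. The helpers below (`act_as`, `scaffold`, `template`) are hypothetical names chosen for illustration; the exact wording of each instruction is an assumption you would tune for your model.

```python
def act_as(role, task):
    """'Act As' (persona) pattern: give the model a role before the task."""
    return f"Act as {role}. {task}"


def scaffold(task, steps):
    """Scaffolding pattern: break a big task into numbered sub-steps."""
    lines = [task, "Work through these steps in order:"]
    lines += [f"{i}. {step}" for i, step in enumerate(steps, start=1)]
    return "\n".join(lines)


def template(task, fields):
    """Template pattern: ask for output in a fixed, labeled format."""
    skeleton = "\n".join(f"{name}: <{name.lower()}>" for name in fields)
    return f"{task}\nAnswer using exactly this format:\n{skeleton}"


print(act_as("a professional psychologist", "Help me plan a calmer morning routine."))
print(scaffold("Write a blog post about composting.",
               ["Outline the sections", "Draft each section", "Write a title"]))
print(template("Review this product description.", ["Summary", "Strengths", "Weaknesses"]))
```

The patterns also compose: `act_as("a tech reviewer", template(...))` layers a persona on top of a strict output format.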

The organized nature and version control found in a great prompt engineering repository also make it easy to A/B test prompts. You can take two slightly different versions of a prompt, try them out, and see which one gives you better results. This helps you fine-tune your prompts, turning prompt creation from a guessing game into a more scientific process.
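A basic A/B test runs both variants over the same inputs and compares an average score. In this sketch, `fake_model` and the `concise` metric are stand-ins invented for demonstration; in practice you would call a real model and use a metric (or human rating) that reflects what "better" means for your task.

```python
from statistics import mean


def ab_test(prompt_a, prompt_b, inputs, run_model, score):
    """Run two prompt variants over the same inputs and compare mean scores.

    run_model(prompt, text) -> output and score(output) -> float are
    supplied by the caller as placeholders for a real model and metric.
    """
    results = {}
    for name, prompt in (("A", prompt_a), ("B", prompt_b)):
        results[name] = mean(score(run_model(prompt, text)) for text in inputs)
    return results


# Fake "model" for demonstration only: it obeys a word limit if the prompt
# mentions "5 words"; otherwise it echoes the input unchanged.
def fake_model(prompt, text):
    words = text.split()
    return " ".join(words[:5]) if "5 words" in prompt else text


def concise(output):
    """Score 1.0 if the output has at most 5 words, else 0.0."""
    return 1.0 if len(output.split()) <= 5 else 0.0


inputs = ["the quick brown fox jumps over the lazy dog",
          "prompt engineering repositories make sharing prompts easier"]
print(ab_test("Summarize:", "Summarize in at most 5 words:", inputs, fake_model, concise))
```

Holding the inputs and the metric fixed is what makes the comparison fair; with a real model you would also want enough inputs that a difference isn't just noise.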

For those eager to grow, many guides set out clear beginner-to-advanced learning paths. You can start with simple ideas like zero-shot (asking the AI without examples) or few-shot (giving a few examples) prompting. Then, you can gradually move to more advanced techniques like Chain-of-Thought (asking the AI to think step-by-step) or Retrieval-Augmented Generation (RAG), which helps the AI use outside information. The best repositories make sure there's always something new to learn, no matter your skill level.

Finding Prompts for Specific Tasks

A well-organized prompt engineering repository makes finding exactly what you need incredibly simple. It's like having a super-smart librarian for your AI prompts!

You can easily use filters and categories. Need a prompt where the AI acts like a specific character? Filter by Personas. Looking for prompts that perform actions like summarizing or rephrasing? Check out Functions. Want to change the tone or style of an AI's output? Look at Modifiers. You can also search by topics like "Software Development" or "Education."

It's also easy to search by use case. Whether you need help with code generation for a new program, ideas for content creation like catchy headlines, or assistance with data analysis to understand complex information, these repositories have you covered. You can even find specialized prompts for fun role-playing, like a "RecipeBot" to plan dinner or a "TechSupportBot" to troubleshoot problems.

Once you find a prompt that's close to what you need, you can adapt it to your unique needs. This might mean changing a few words in a template (like replacing [NAME] with a real name). Or you might combine parts from different prompts to create a custom solution that fits your exact project.
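Filling bracketed placeholders such as [NAME] is easy to automate, and it helps to catch any placeholder you forgot to fill. The `fill` helper and the uppercase `[KEY]` convention below are assumptions for illustration; repositories use many different placeholder styles.

```python
import re

PLACEHOLDER = re.compile(r"\[([A-Z_]+)\]")   # matches [NAME], [TOPIC], ...


def fill(template, **values):
    """Replace [KEY] placeholders; fail loudly if any are left unfilled."""
    missing = sorted(set(PLACEHOLDER.findall(template)) - set(values))
    if missing:
        raise ValueError(f"unfilled placeholders: {missing}")
    return PLACEHOLDER.sub(lambda m: values[m.group(1)], template)


greeting = "Write a friendly welcome email to [NAME] about [TOPIC]."
tone = "Keep it under 100 words and avoid jargon."

# Adapt one template, then combine it with a modifier taken from another prompt.
custom = fill(greeting, NAME="Priya", TOPIC="our onboarding program") + " " + tone
print(custom)
```

Raising an error on a missing value is a deliberate choice: a prompt that silently reaches the model with a literal "[TOPIC]" in it tends to produce confusing output.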

How to Contribute to a Prompt Engineering Repository

The real magic of a community-powered prompt engineering repository comes from everyone chipping in. When you share your successful prompts and ideas, you make the whole AI world smarter! Here's how you can get involved:

If you use platforms like GitHub, you can often "fork" a repository. This means you make your own copy of the project where you can experiment and make changes. Once you've improved a prompt or found a better way to do something, you can submit a pull request. This is how you propose your changes to the main repository and ask the maintainers to include them. Many project owners are happy to get feedback and suggestions!

Even if you're not adding brand-new prompts, giving feedback on existing ones is valuable. Letting others know whether a prompt is clear, effective, or could be better helps everyone. And if you've created a prompt that works wonders for a specific task, sharing it (with clear instructions and examples) means others can benefit from your hard work. This is how our shared AI knowledge keeps growing!

You can also join community discussions. Many repositories have chat groups, forums, or other places where people talk about AI. Joining in is a fantastic way to learn, ask questions, and help shape the future of prompt engineering.

Finally, like any community project, following contribution guidelines is important. These rules help keep everything organized and high-quality. Just be mindful of any rules about how you can use or build on other people's work, ensuring everyone gets proper credit.

Advanced Techniques Found in Top Repositories

As we dig deeper into prompt engineering, repositories become indispensable for exploring cutting-edge techniques and emerging trends. These aren't just about tweaking a few words; they involve sophisticated strategies to elicit more intelligent, nuanced, and reliable responses from LLMs. Think of it as moving from basic conversation to mastering the art of persuasion with AI!

A great prompt engineering repository doesn't just store prompts; it often breaks down and explains these powerful methods. Let's look at some of the advanced techniques you'll find cataloged and explained:

First up is Chain-of-Thought (CoT) Prompting. Imagine you're asking a student to solve a math problem. Instead of just wanting the answer, you ask them to "show their work." That's CoT! You instruct the AI to think step-by-step before giving its final answer. This simple instruction often leads to much more accurate results, especially for tricky problems that require logical reasoning.
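In practice, a Chain-of-Thought prompt often pairs the "think step by step" instruction with a predictable final line, so the answer can be separated from the reasoning. The wording, the `Answer:` convention, and the hand-written `reply` below are assumptions for illustration, not output from a real model.

```python
def chain_of_thought(question):
    """Wrap a question in a step-by-step (Chain-of-Thought) instruction."""
    return (
        f"{question}\n"
        "Let's think step by step. Show your reasoning, then give the final "
        "answer on a last line that starts with 'Answer:'."
    )


def final_answer(model_output):
    """Return the text after the last 'Answer:' line, or None if absent."""
    for line in reversed(model_output.strip().splitlines()):
        if line.startswith("Answer:"):
            return line[len("Answer:"):].strip()
    return None


prompt = chain_of_thought("A shop sells pens at 3 for $2. How much do 12 pens cost?")
# A hand-written example of the kind of reply a model might give:
reply = "12 pens is 4 groups of 3.\nEach group costs $2, so 4 x $2 = $8.\nAnswer: $8"
print(final_answer(reply))   # $8
```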

Then there are the fundamental ways we teach AI. Zero-shot Learning is the most basic – you give the AI a task, and it tries to do it without any examples. It just uses what it already knows. Building on that, Few-shot Learning is like giving the AI a few good examples within your prompt. These examples act like mini-lessons, helping the model understand exactly the kind of response you're looking for, whether it's a specific format or style.
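The difference between the two is easiest to see side by side. This sketch builds a zero-shot and a few-shot prompt for a sentiment task; the `Text:`/`Label:` layout is an assumed format, and the key idea is that the examples use exactly the same layout the model is expected to continue.

```python
def zero_shot(task, text):
    """Zero-shot: the instruction and the input only, no examples."""
    return f"{task}\n\nText: {text}\nLabel:"


def few_shot(task, examples, text):
    """Few-shot: prepend worked (input, output) examples in the same format."""
    shots = "\n\n".join(f"Text: {inp}\nLabel: {out}" for inp, out in examples)
    return f"{task}\n\n{shots}\n\nText: {text}\nLabel:"


task = "Classify the sentiment of the text as positive or negative."
examples = [
    ("I loved every minute of it.", "positive"),
    ("The battery died after an hour.", "negative"),
]
print(zero_shot(task, "Setup was quick and painless."))
print("---")
print(few_shot(task, examples, "Setup was quick and painless."))
```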

One of the most powerful techniques is Retrieval-Augmented Generation (RAG). Think of it this way: LLMs are incredibly smart, but their knowledge is limited to what they learned during training. What if you need them to use current data, or information from your private company documents? RAG lets the AI look up relevant information from an external knowledge base and adds it to the prompt before the model generates a response. This is huge for grounding answers in factual, up-to-date information and reducing how often the AI makes things up (what we call "hallucinations"). Many repositories even show you how to set up RAG pipelines!
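The core RAG loop is "retrieve, then put the results into the prompt." The sketch below uses simple word overlap as a deliberately crude stand-in for the embedding-based vector search a real pipeline would use; the documents and prompt wording are made up for illustration.

```python
import re


def tokens(text):
    """Lowercased word set, used for a crude overlap score."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query, documents, k=2):
    """Rank documents by word overlap with the query (a stand-in for a real
    embedding-based vector search) and return the top k."""
    q = tokens(query)
    ranked = sorted(documents, key=lambda d: len(q & tokens(d)), reverse=True)
    return ranked[:k]


def rag_prompt(query, documents, k=2):
    """Build a prompt that grounds the answer in the retrieved context."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents, k))
    return (
        "Answer the question using only the context below. "
        "If the context does not contain the answer, say you don't know.\n\n"
        f"Context:\n{context}\n\nQuestion: {query}"
    )


docs = [
    "Our office is closed on public holidays.",
    "Refunds are processed within 5 business days.",
    "The cafeteria serves lunch from 12:00 to 14:00.",
]
print(rag_prompt("How long do refunds take?", docs, k=1))
```

The "say you don't know" instruction matters as much as retrieval: it gives the model an explicit alternative to guessing when the context falls short.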

Another clever trick is Self-Consistency. For complex problems, instead of asking the AI for just one answer, you prompt it to come up with several different reasoning paths. Then, you combine or compare these paths to find the most robust and accurate final answer. It’s like getting opinions from several experts and then finding the common ground.
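The "find the common ground" step is usually a simple majority vote over the final answers from each sampled reasoning path. The sample answers below are hypothetical; in a real setup they would come from running the same Chain-of-Thought prompt several times with sampling enabled and extracting each final answer.

```python
from collections import Counter


def self_consistent_answer(sampled_answers):
    """Majority vote over final answers from several sampled reasoning paths.
    Paths with no extractable answer (None) are ignored."""
    answers = [a.strip() for a in sampled_answers if a is not None]
    if not answers:
        return None
    winner, _count = Counter(answers).most_common(1)[0]
    return winner


# Hypothetical final answers extracted from five sampled reasoning paths:
samples = ["$8", "$8", "$6", "$8", None]
print(self_consistent_answer(samples))   # $8
```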

Beyond these core advanced methods, comprehensive repositories often explore even more cutting-edge strategies. You might find explanations for Tree of Thoughts (ToT), which is an even more advanced version of CoT where the AI explores many reasoning paths and picks the best one. There's also Automatic Reasoning and Tool-use (ART), where LLMs learn to use external tools like calculators or search engines to solve problems. And believe it or not, there's even Automatic Prompt Engineer (APE), where LLMs actually help you create and optimize prompts!

You'll also come across techniques like Negative Prompting, which is popular in image generation but also useful for text. This is where you explicitly tell the AI what not to do or what kind of output to avoid. For multi-step processes, you'll see Prompt Chaining and Sequencing, where one prompt's output becomes the next prompt's input, creating a smooth workflow. And underlying it all is Instruction Engineering, the ongoing art of crafting super clear and precise instructions to get the best out of your AI, minimizing any misunderstandings.
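Prompt chaining boils down to a loop that feeds each step's output into the next step's template, and a negative instruction is just one more line in a template. In this sketch `fake_model` is a placeholder invented for demonstration (it simply echoes the last line of its prompt); swap in a real model call to use it.

```python
def run_chain(steps, initial_input, run_model):
    """Prompt chaining: each step's output becomes the next step's input."""
    data = initial_input
    for template in steps:
        data = run_model(template.format(input=data))
    return data


AVOID = "Do not use bullet points or exclamation marks."   # a negative instruction

steps = [
    "Extract the key facts from these notes:\n{input}",
    "Write a two-sentence summary of these facts:\n{input}",
    "Rewrite this summary for a customer email. " + AVOID + "\n{input}",
]

# Stand-in for a real LLM call, for demonstration only: it records each
# prompt it receives and returns the last line of that prompt.
calls = []


def fake_model(prompt):
    calls.append(prompt)
    return prompt.splitlines()[-1]


result = run_chain(steps, "Meeting moved to Friday; bring Q3 numbers.", fake_model)
print(len(calls))   # 3
print(result)
```

Keeping each step small and single-purpose makes it easier to see which link in the chain went wrong when the final output disappoints.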

These advanced techniques, and many more, are often explored in depth within academic papers and research projects.

Frequently Asked Questions about Prompt Repositories

It's perfectly normal to have questions about prompt engineering repositories, especially if you're just starting your journey with AI. Let's tackle some of the most common ones we hear:

What are prompt patterns and why are they important?

Imagine you're building with LEGOs. You could just randomly stick bricks together, or you could follow a blueprint for a fantastic castle! Prompt patterns are very much like those blueprints for your AI conversations. They're reusable solutions or "recipes" for structuring your prompts to get specific, desired outcomes from an LLM.

Why are they so important? They help us move beyond guessing and trial-and-error. Instead, they provide a more systematic and predictable way to create effective prompts, which means fewer wasted attempts and more consistent results! For example, the "Persona" pattern, where you tell the AI to "Act as an expert...", is super versatile. You can use it whether you need a prompt that acts as a wise psychologist, an energetic sports coach, or a savvy financial advisor. Understanding these core patterns makes prompt engineering more efficient and scalable, which matters all the more because the AI world changes so quickly. They offer a guiding light no matter which LLM you happen to be using.

How do repositories handle different AI models?

That's a fantastic question, because as you might guess, what works perfectly on one AI model might need a little tweak for another! Many prompt engineering repositories are quite savvy about this. Some will categorize prompts specifically by the AI model they work best on (think "GPT-4 specific prompts" or "LLaMA-optimized prompts"). Others focus on more general prompt patterns that are designed to be adaptable across various models.

The key here is adaptability. While a prompt might be a great starting point, you might need to fine-tune it slightly for your chosen LLM. A truly helpful repository will often include notes or examples showing how these variations might work, or offer best practices for adapting prompts. The field is constantly evolving, with researchers always working to make prompts that generalize even better across all the different LLMs out there.

What are the risks of using public prompts?

Prompt engineering repositories are incredible treasure chests of knowledge, but just like any shared resource, it's smart to be aware of a few potential pitfalls when using public prompts:

Potential Biases: Prompts, like any human-made content, can carry hidden biases from their creators, and the models they run on can carry biases from their training data. If a prompt is designed with biased language or assumptions, the AI's output might reflect and even amplify those biases. Always be mindful!

Outdated Techniques: The world of AI moves at warp speed! A prompt that was great last year might be less effective, or even completely obsolete, today. This could be due to new model updates or breakthrough research. The best repositories are actively maintained to keep their content fresh and relevant.

Adversarial Purposes: While the vast majority of prompts in reputable repositories are designed for beneficial uses, it's worth knowing that some prompts can be crafted for "adversarial prompting." This means they're intended to exploit vulnerabilities in LLMs, possibly generating harmful content or bypassing safety filters. It's a rare risk, but one to be aware of.

Quality Variation: Not every public prompt is a masterpiece. Some might be poorly designed, inefficient, or simply not as effective as they claim to be.

Because of these points, it's absolutely crucial to always test and adapt public prompts carefully before using them in production environments or for any critical tasks. Never blindly trust an AI's output! Always verify information, especially if the prompt asks the model to "quote sources" or "fact-check itself." The best practice is to understand the prompt's intent, thoroughly test its behavior, and then refine it to meet your specific quality and safety standards. It's all about building on others' great work, but doing so smartly and safely!

Evolve Your Prompts from Solo Art to Collaborative Science

We've journeyed through prompt engineering repositories and found something remarkable. These aren't just digital filing cabinets for AI instructions. They're transformative platforms that change how we work with artificial intelligence entirely.

Think about how you probably started with prompts. Maybe you typed a question into ChatGPT, didn't love the answer, and tried rewording it. Then you tried again. And again. Sound familiar? That's the solo art approach: you against the blank prompt box, hoping to stumble upon the magic words.

But here's what we've learned: the future belongs to collaborative science. Instead of everyone reinventing the wheel in isolation, we're building something bigger together. When you share a breakthrough prompt, someone else improves it. When they share their version, another person adapts it for a completely different use case. Suddenly, that simple question you started with becomes part of a living, breathing knowledge base.

This shift from individual effort to community knowledge isn't just nice to have; it's essential. AI moves fast. Really fast. The techniques that worked last month might be outdated next week. But when we pool our findings, test each other's ideas, and build on proven foundations, we all move forward together.

The magic happens when version control meets human creativity. Every tweak gets saved. Every experiment has a home. Every "what if I tried this instead?" moment becomes part of the story. You're not just crafting prompts anymore; you're contributing to a shared understanding of how to communicate with AI effectively.

This collaborative approach doesn't just make us better prompt engineers. It makes AI itself more useful for everyone. When we document what works, share our failures, and celebrate our breakthroughs together, we're essentially teaching the world how to speak AI fluently.

Ready to be part of this evolution? The future of prompt engineering is collaborative, and it's happening right now. Explore the prompt marketplace and see how your next great prompt idea can become part of something much bigger than yourself.